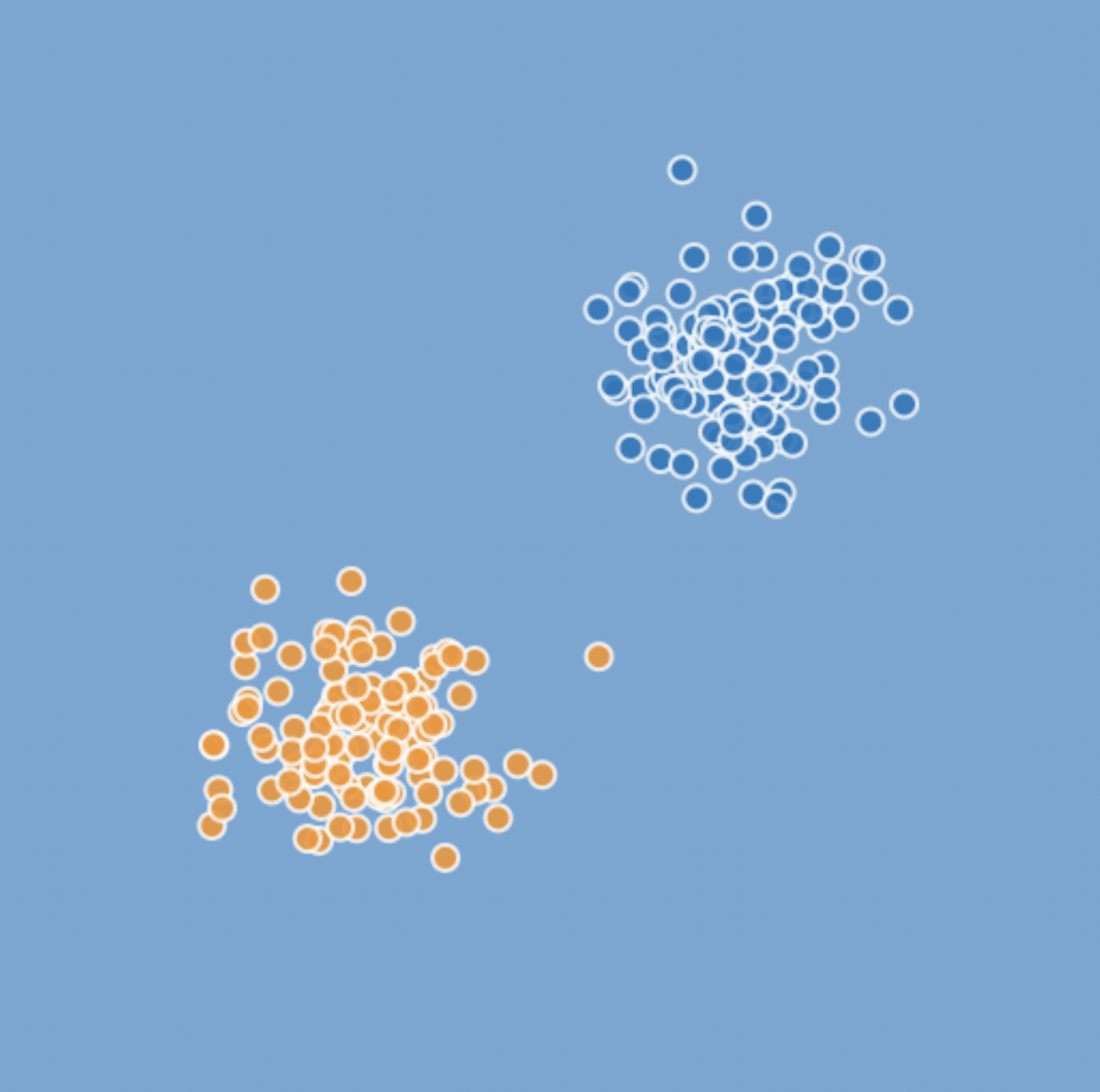

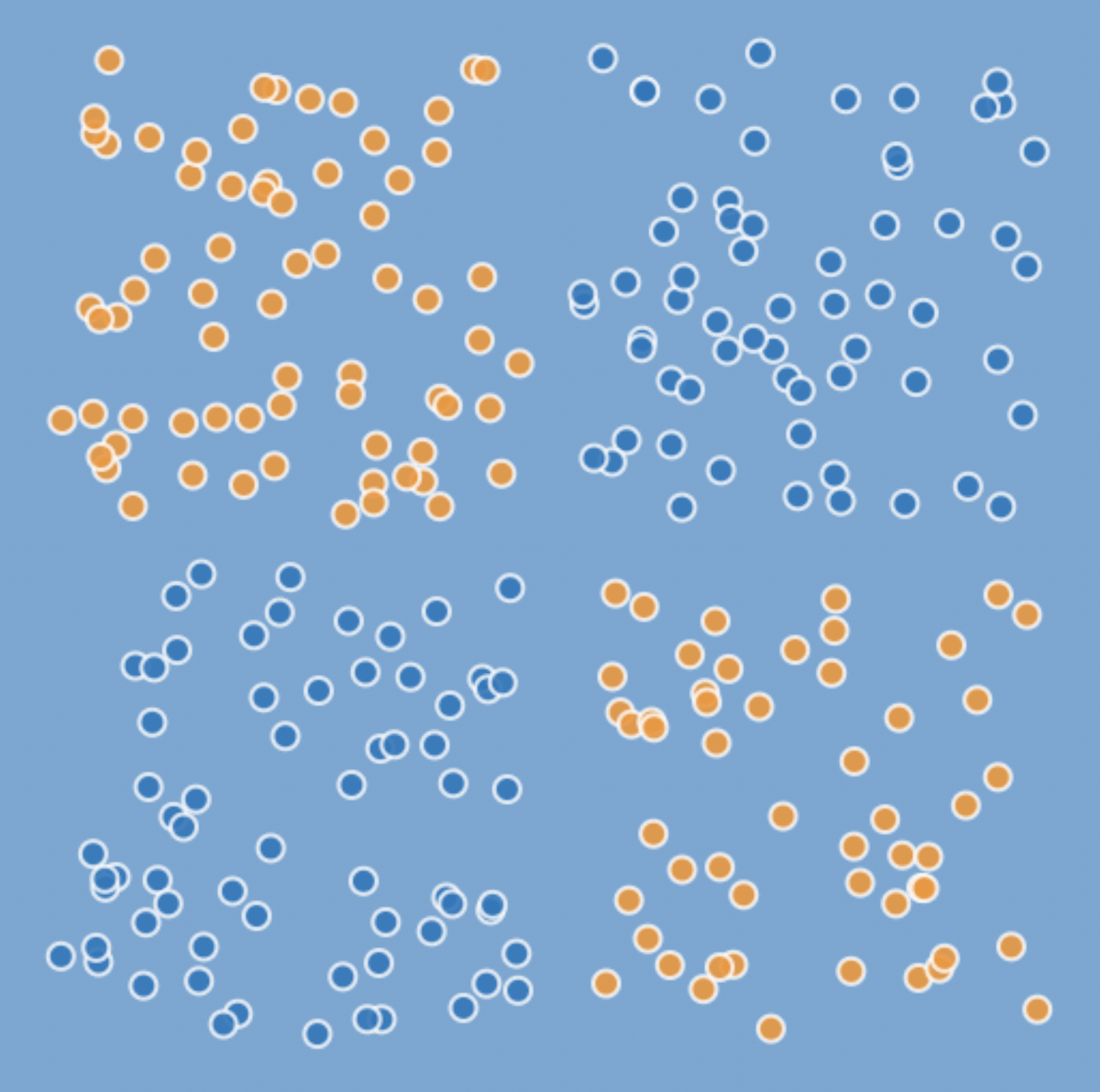

Here are pictures of two different problems.

Each has two classes, and we want to use a neural network to solve both.

What's the difference between them?

In the first problem, you can separate the two classes with a straight line.

This is a "linearly-separable" problem.
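To make "linearly separable" concrete, here is a minimal perceptron sketch in plain Python on a few made-up points. The data and function names are hypothetical, not taken from the Playground. A perceptron only ever learns a single line, and on linearly separable data its update rule is guaranteed to stop making mistakes after a finite number of passes.

```python
# Minimal perceptron sketch on hypothetical 2-D points.
# It learns one line w[0]*x + w[1]*y + b = 0 and stops once every point
# lies on the correct side of it.

def predict(w, b, x):
    """Return 1 if x lies on the positive side of the line, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train_perceptron(points, labels, max_epochs=100):
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            error = y - predict(w, b, x)  # -1, 0, or +1
            if error != 0:
                # Nudge the line toward classifying this point correctly.
                w[0] += error * x[0]
                w[1] += error * x[1]
                b += error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: the line separates the classes
            return w, b, True
    return w, b, False

# Hypothetical separable data: class 1 sits above the line y = x, class 0 below it.
points = [(0, 1), (1, 2), (2, 3), (1, 0), (2, 1), (3, 2)]
labels = [1, 1, 1, 0, 0, 0]

w, b, converged = train_perceptron(points, labels)
print(converged)  # True
```

On data like the second problem, no line exists, so the same loop would simply run out of epochs with `converged` still `False`.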

In the second problem, no straight line can separate the two classes.

This problem is not linearly separable, which makes it much harder to solve.

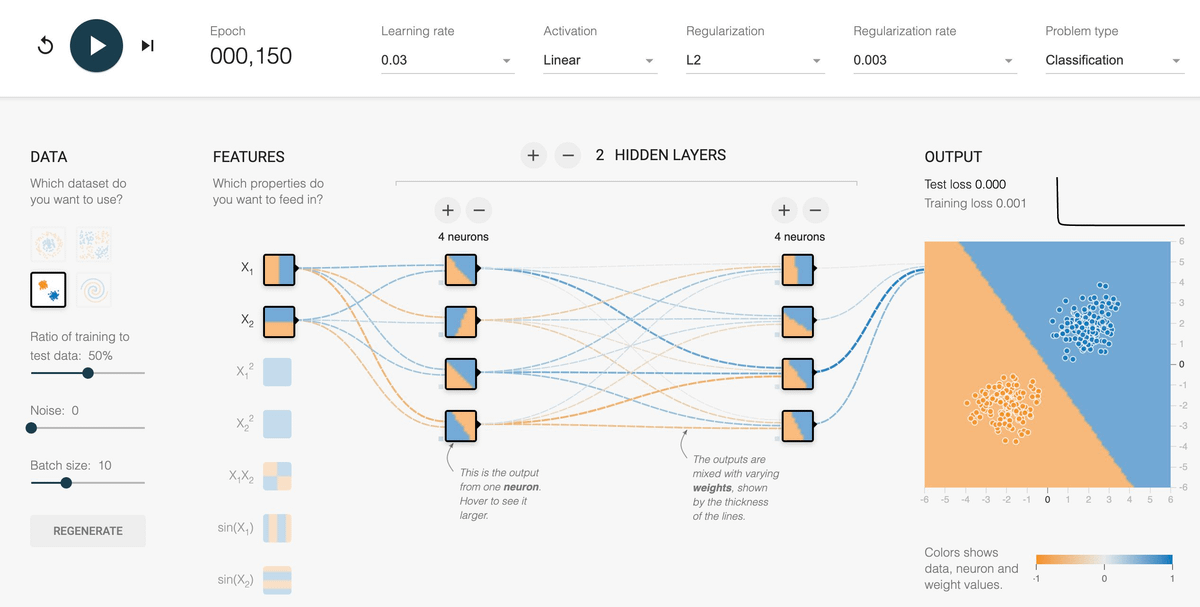

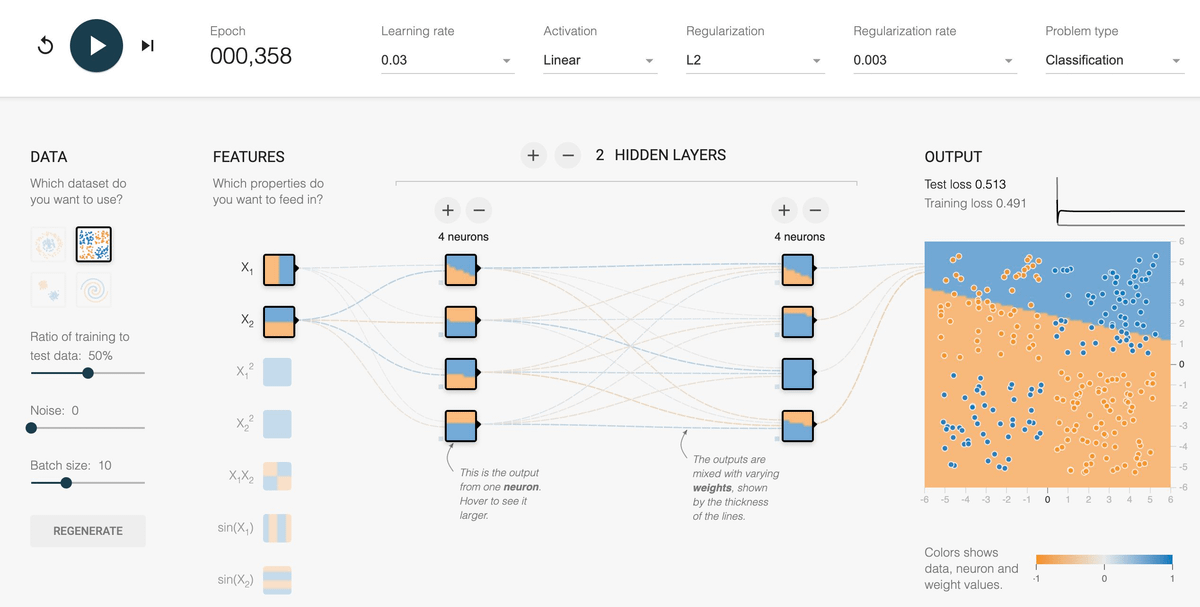

Here is a neural network with 2 hidden layers of 4 neurons each, using linear activations.

In just a few iterations, the network finds the correct solution for the first problem.

Notice how the network uses a single dividing line in the output. That's all it can do with linear activations.
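Why don't extra layers help? Assuming "Linear" means the identity activation, every layer computes `W x + b`, and a composition of affine maps is itself affine. The sketch below uses plain Python, the same 2-4-4-1 shape as the network above, and arbitrary hypothetical weights (not trained ones). It collapses the three layers into a single `W` and `b` and checks that both versions give the same output. Because the result is still `w·x + b`, the decision boundary `w·x + b = 0` is a straight line, and thresholding a monotonic output function such as sigmoid or tanh doesn't change that.

```python
import math

def matvec(W, x):
    # Matrix-vector product: (W x)[i] = sum_j W[i][j] * x[j]
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def matmul(A, B):
    # Matrix product: (A B)[i][j] = sum_k A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def add(u, v):
    return [p + q for p, q in zip(u, v)]

def linear_layer(W, b, x):
    # "Linear" activation: the layer outputs W x + b with no non-linearity.
    return add(matvec(W, x), b)

# 2 inputs -> 4 hidden -> 4 hidden -> 1 output; illustrative weights only.
W1 = [[1, -2], [0.5, 1], [-1, 3], [2, 2]]
b1 = [0.5, -0.25, 1, 0]
W2 = [[1, 0, -1, 0.5], [0, 2, 1, -1], [1, 1, 1, 1], [-0.5, 0, 2, 1]]
b2 = [0, 0.5, -0.5, 1]
W3 = [[1, -1, 0.5, 2]]
b3 = [0.25]

def deep_linear_net(x):
    h1 = linear_layer(W1, b1, x)
    h2 = linear_layer(W2, b2, h1)
    return linear_layer(W3, b3, h2)

# Collapse the three layers into one: W = W3 W2 W1, b = W3 (W2 b1 + b2) + b3.
W = matmul(W3, matmul(W2, W1))
b = add(matvec(W3, add(matvec(W2, b1), b2)), b3)

x = [0.75, -1.5]
print(math.isclose(deep_linear_net(x)[0], linear_layer(W, b, x)[0]))  # True
```

However many linear layers you stack, this collapse still applies, so depth alone can't bend the boundary.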

I tried the same network on the second problem.

It doesn't work.

To solve this problem, the network needs to be able to find non-linear solutions.

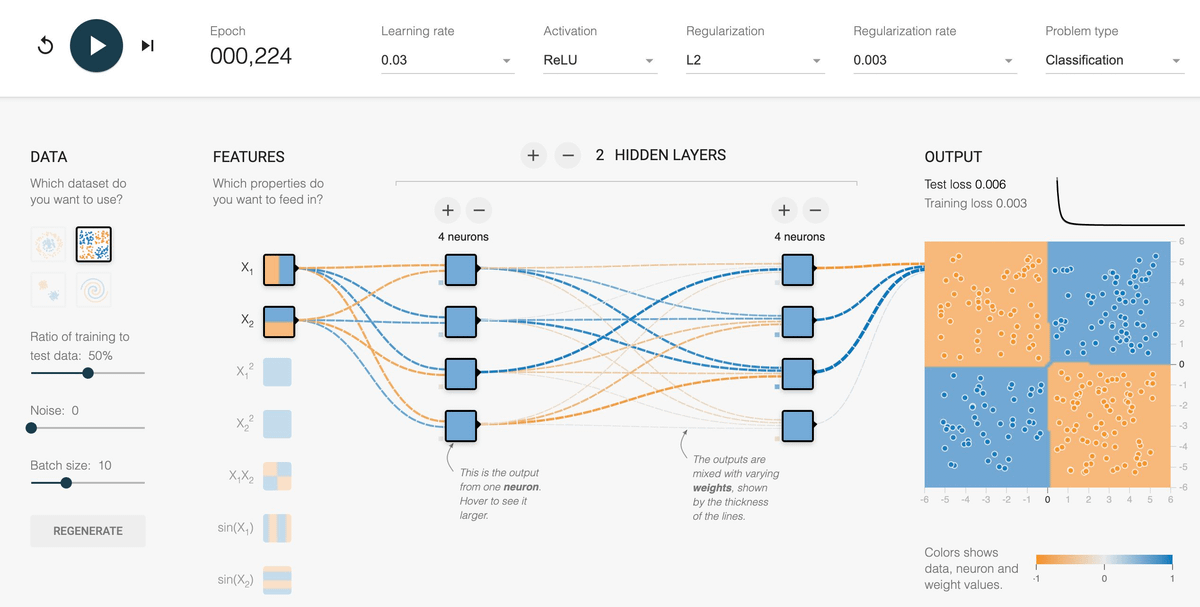

I changed the "Activation" setting from "Linear" to "ReLU".

This introduces the non-linearity we need for the network to become more powerful.

Same network structure, with just a different activation.

And now we can solve the problem!
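Here is a tiny illustration of "same network structure, just a different activation". It uses XOR as a stand-in for a non-linearly-separable problem; XOR is not necessarily the dataset in the picture. The 2-2-1 weights are hand-picked for this sketch, not learned. With ReLU (`max(0, z)`) the network reproduces XOR, while swapping in the identity ("Linear") activation with the exact same weights does not.

```python
# Hand-picked (hypothetical) weights for a 2-2-1 network on XOR.

def relu(z):
    return max(0.0, z)

def identity(z):
    return z  # the "Linear" activation

def tiny_net(x1, x2, activation):
    h1 = activation(x1 + x2)        # hidden unit 1
    h2 = activation(x1 + x2 - 1.0)  # hidden unit 2, with a bias of -1
    return h1 - 2.0 * h2            # linear output unit

def classify(x1, x2, activation):
    return 1 if tiny_net(x1, x2, activation) > 0.5 else 0

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_labels = [0, 1, 1, 0]

relu_preds = [classify(a, b, relu) for a, b in inputs]
linear_preds = [classify(a, b, identity) for a, b in inputs]
print(relu_preds)    # [0, 1, 1, 0], matches XOR
print(linear_preds)  # [1, 1, 1, 0], not XOR
```

With the identity activation, the network reduces to `2 - (x1 + x2)`, a linear function, so no choice of weights could produce XOR. ReLU's kink at zero is what lets the second hidden unit switch on only when both inputs are 1.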

Main takeaway:

A neural network without non-linear activation functions can only find linear separation boundaries.

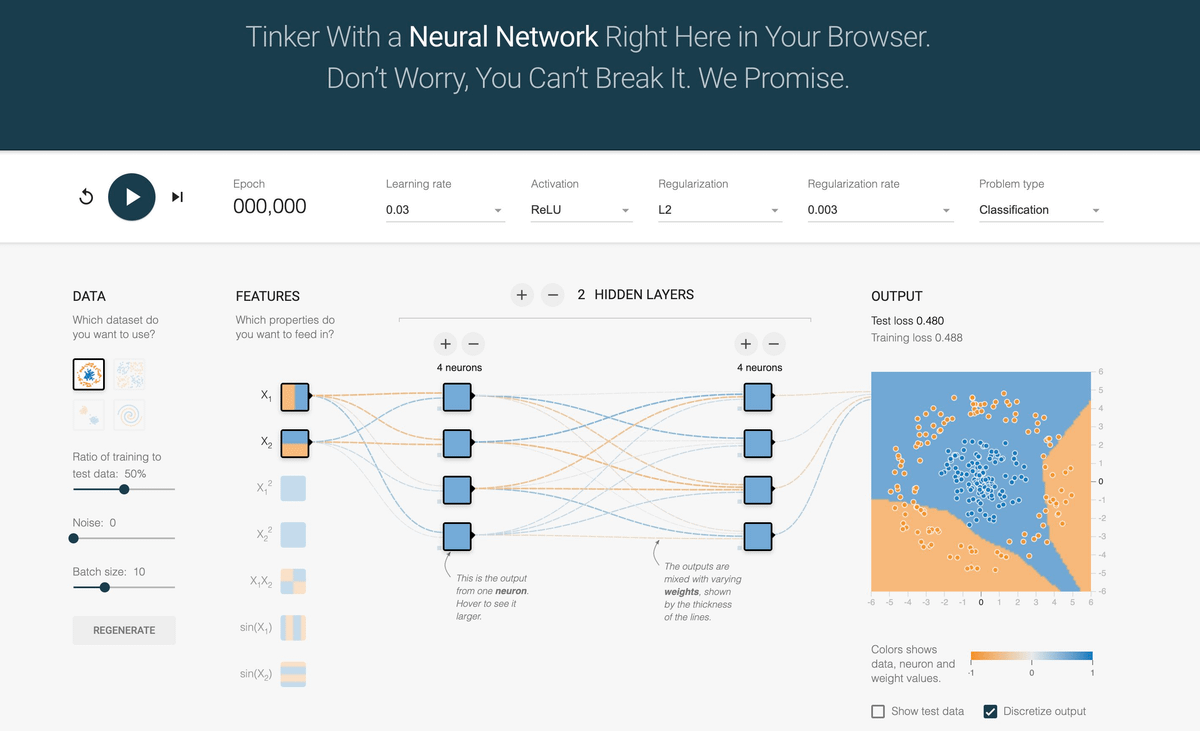

The pictures in this thread come from the TensorFlow Playground, which you can try here: playground.tensorflow.org.

Every week, I break down machine learning concepts to give you ideas on applying them in real-life situations.

Follow me @svpino to ensure you don't miss what's coming next.