Let's see how Amazon CodeWhisperer and GitHub Copilot compare!

CodeWhisperer: in preview, just announced. Supports Python, Java, and JavaScript.

Copilot: generally available, just graduated from preview. Supports dozens of languages (?)

I'll compare a couple scenarios, but let me know if you have test ideas👇

I'll use Python because both tools support it (Java is too verbose, and I have a complicated relationship with JavaScript).

CodeWhisperer and Copilot both give multiple suggestions. I'll choose the "best" one, as determined by my dum-dum brain. Suggestions will change as the tools evolve!

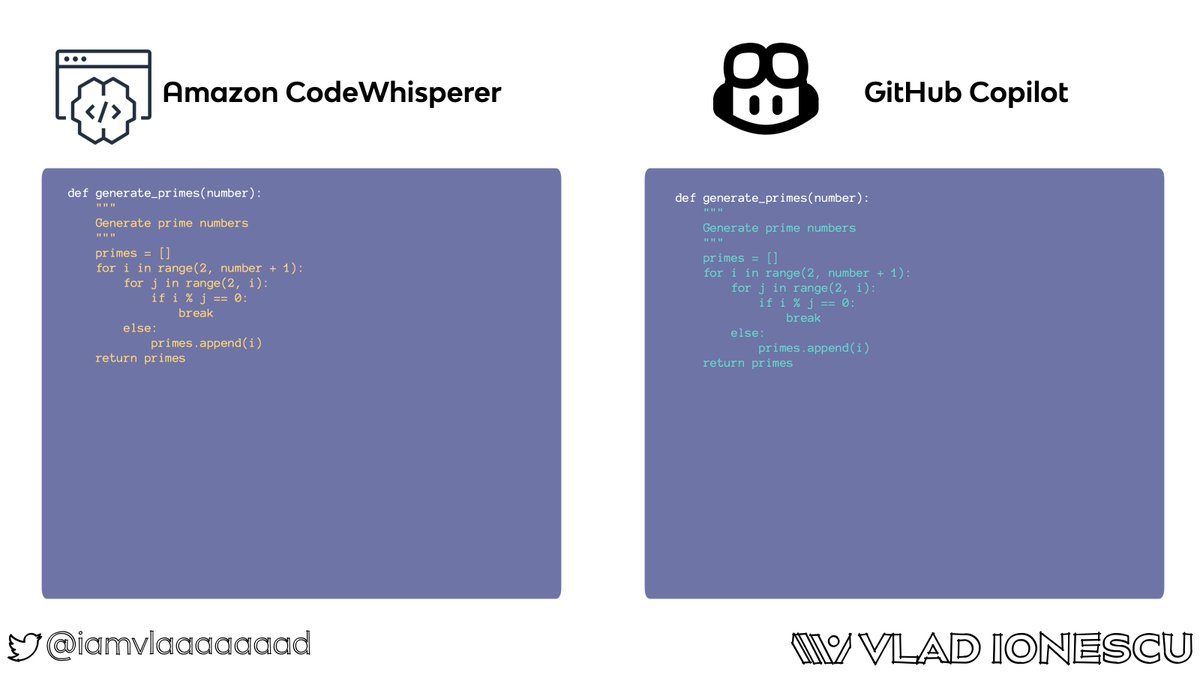

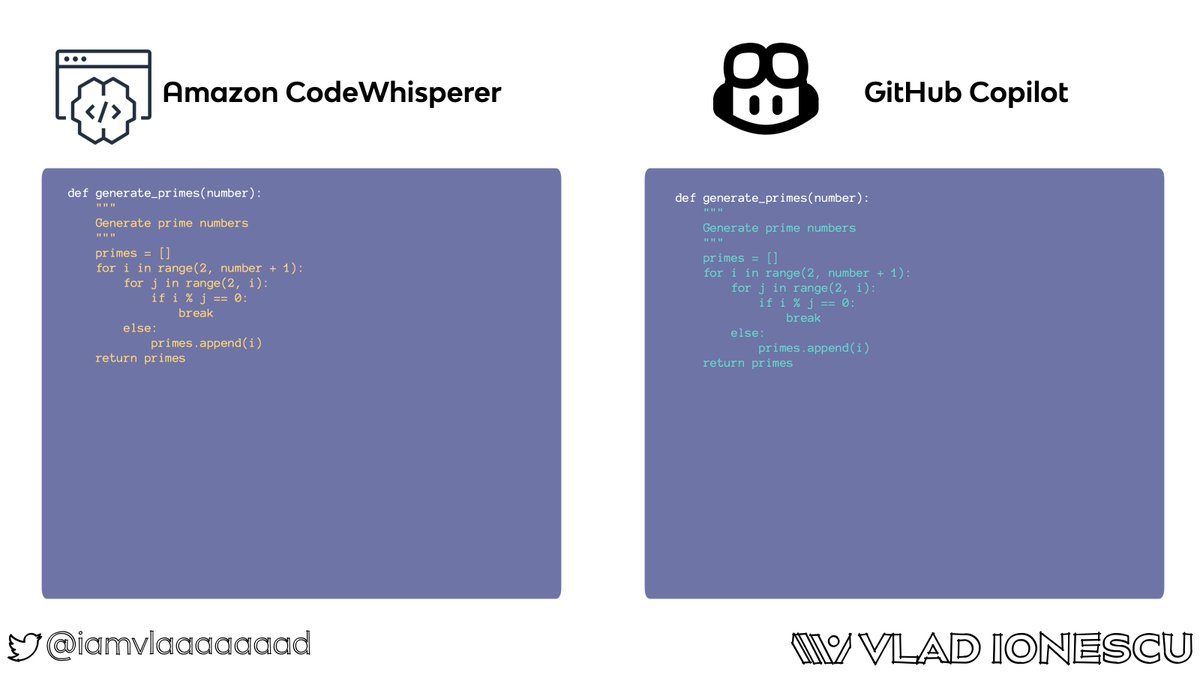

Scenario 1: prime number generation!

CodeWhisperer and Copilot suggest the same code (the colorful code in the screenshots is what each tool suggested) 😆
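For reference, here's a minimal sketch of the kind of code this prompt asks for. It's my own illustration, not either tool's suggestion. It uses trial division to check a single number and the Sieve of Eratosthenes to generate every prime up to a limit. The function names are hypothetical.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is prime, using trial division up to sqrt(n)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Only odd divisors up to the integer square root need checking.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


def primes_up_to(limit: int) -> list:
    """Return all primes <= limit using the Sieve of Eratosthenes."""
    if limit < 2:
        return []
    is_composite = [False] * (limit + 1)
    primes = []
    for n in range(2, limit + 1):
        if not is_composite[n]:
            primes.append(n)
            # Start at n*n: smaller multiples were already marked by smaller primes.
            for multiple in range(n * n, limit + 1, n):
                is_composite[multiple] = True
    return primes


print(primes_up_to(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```

The sieve is the better choice when you want many primes at once. `is_prime` is fine for one-off checks.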

Scenario 2: dum-dum interview questions that should have no place in the interview process!

For "Longest Substring Without Repeating Characters"... it seems that CodeWhisperer preferred code with more comments? I don't wanna look at the code because I hate LeetCode with a passion.
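For anyone who *does* want to look, here's a minimal sketch of the common sliding-window approach. This is not either tool's output, and the function name is hypothetical. It remembers the index where each character was last seen and jumps the window's left edge past any repeat, so it makes a single pass over the string.

```python
def length_of_longest_substring(s: str) -> int:
    """Length of the longest substring of s with no repeated characters.

    `start` is the left edge of the current window; `last_seen` maps each
    character to the index where it most recently appeared.
    """
    last_seen = {}
    start = 0
    best = 0
    for i, ch in enumerate(s):
        # If ch already appears inside the current window, move the left edge past it.
        if ch in last_seen and last_seen[ch] >= start:
            start = last_seen[ch] + 1
        last_seen[ch] = i
        best = max(best, i - start + 1)
    return best


print(length_of_longest_substring("abcabcbb"))  # 3 ("abc")
```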

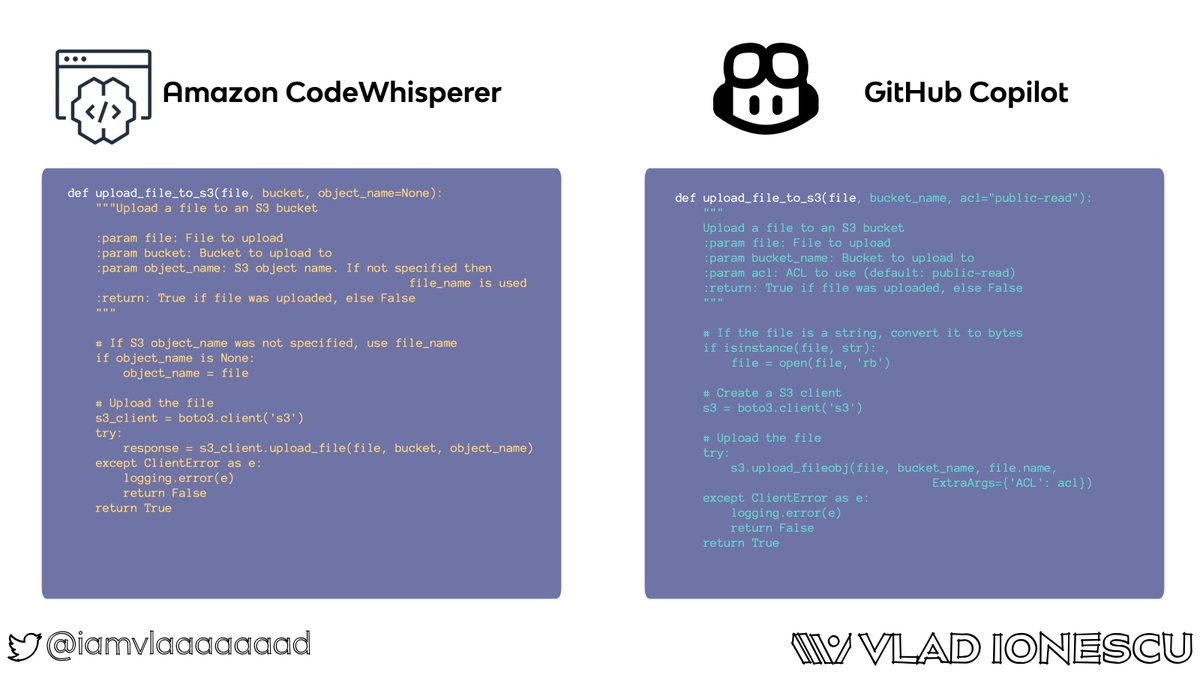

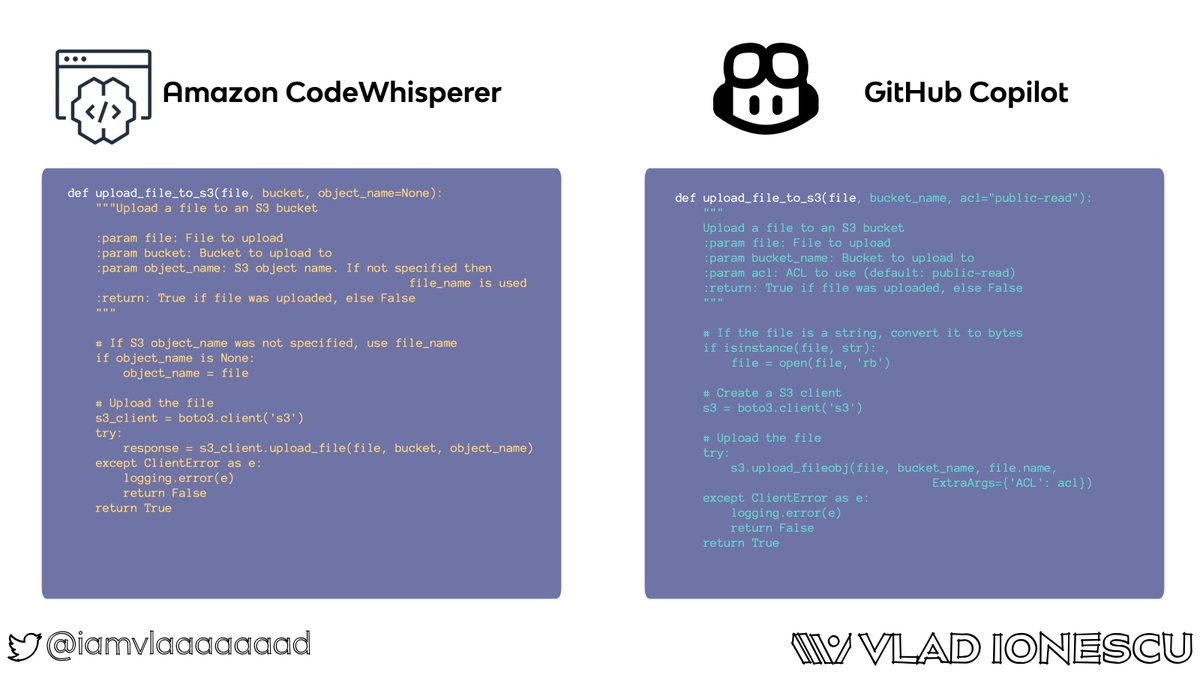

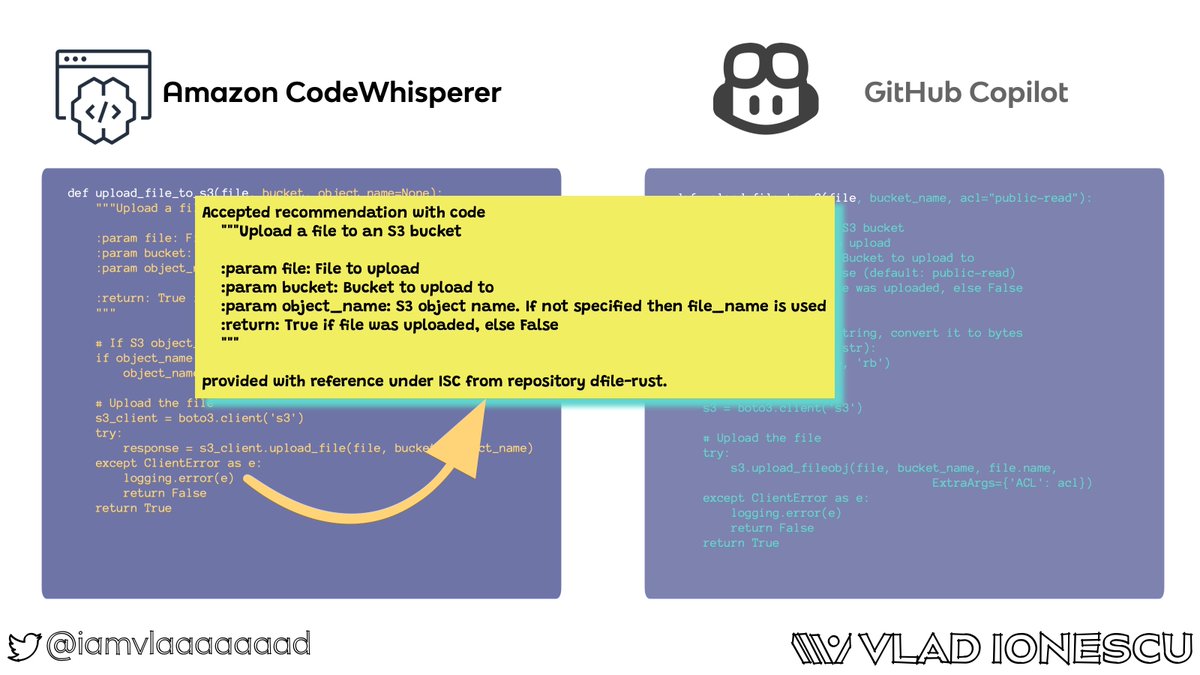

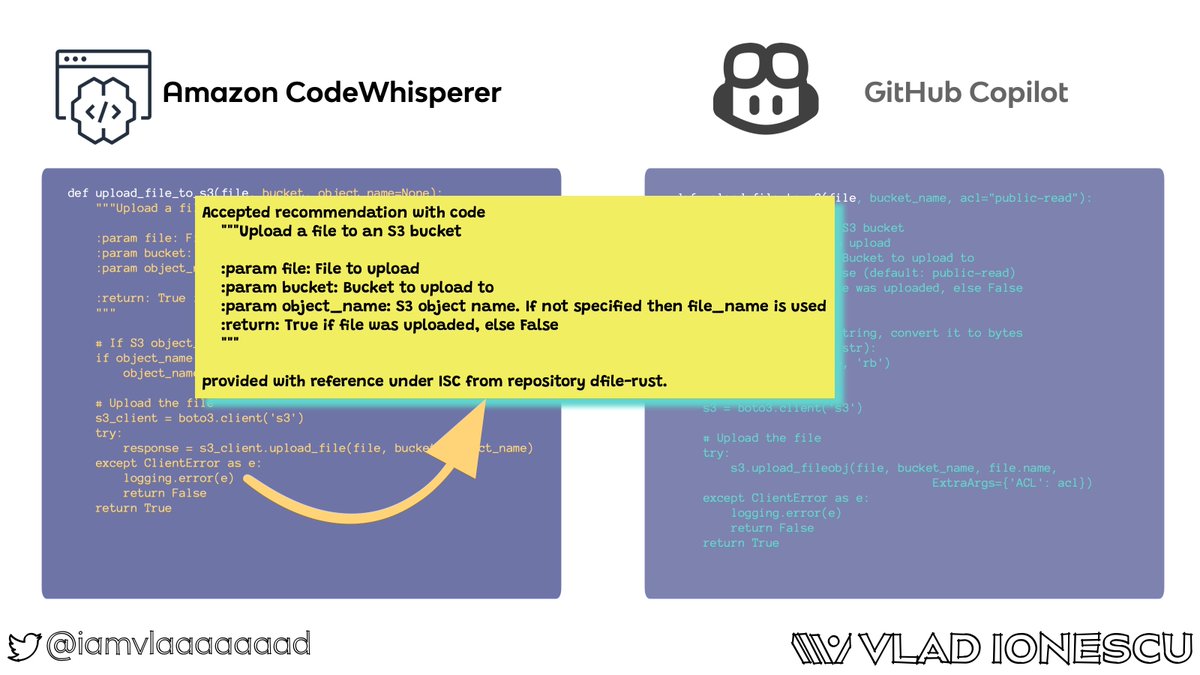

Scenario 3: uploading a file to S3!

CodeWhisperer doesn't initialize the S3 boto3 client (so it was trained on a bunch of Lambda code?), while Copilot wants to make the uploaded file public (so it was trained on tutorial code?).
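Here's a minimal sketch of what I'd rather see, assuming boto3 and the default credential chain. It creates the client explicitly and leaves the object private, with no public-read ACL passed via `ExtraArgs`. `upload_to_s3` and its parameters are hypothetical names. The optional `s3_client` parameter just lets you pass in your own client, or a stub.

```python
import os


def upload_to_s3(path, bucket, key=None, s3_client=None):
    """Upload a local file to S3 and return the object key used.

    Sketch assumptions: credentials come from boto3's default chain, and the
    object stays private (no ACL is set, so the bucket's defaults apply).
    """
    if key is None:
        # Default the object key to the file name, e.g. "report.csv".
        key = os.path.basename(path)
    if s3_client is None:
        # Create the client explicitly rather than assuming a module-level
        # client exists elsewhere (the usual pattern in Lambda handlers).
        # boto3 is imported here so a stub client works without boto3 installed.
        import boto3
        s3_client = boto3.client("s3")
    # upload_file(Filename, Bucket, Key) switches to multipart uploads for large files.
    s3_client.upload_file(path, bucket, key)
    return key
```

Usage would look like `upload_to_s3("data/report.csv", "my-bucket")`, where `my-bucket` is a placeholder bucket name.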

Scenario 3 extra: uploading a file to S3, but with code reference!

CodeWhisperer has the awesome feature of "Reference tracker": it tells you where it got the code from!

We know that part of the S3 upload code was taken from dfile-rust! THIS is an awesome feature and I love it!

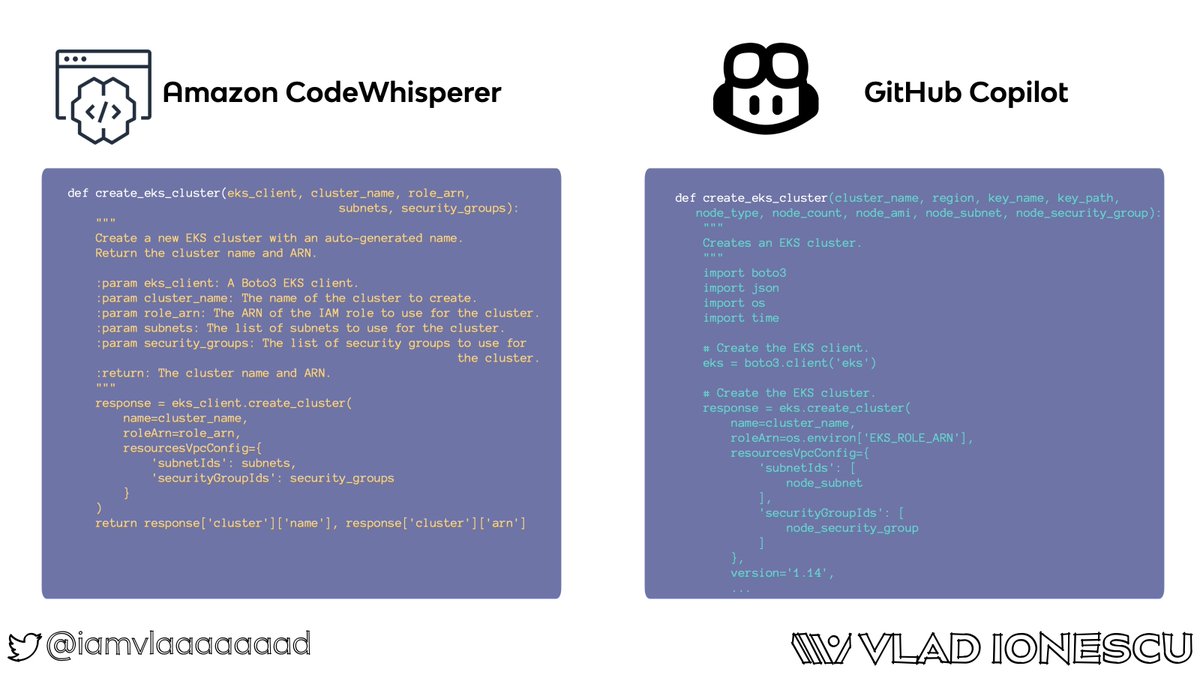

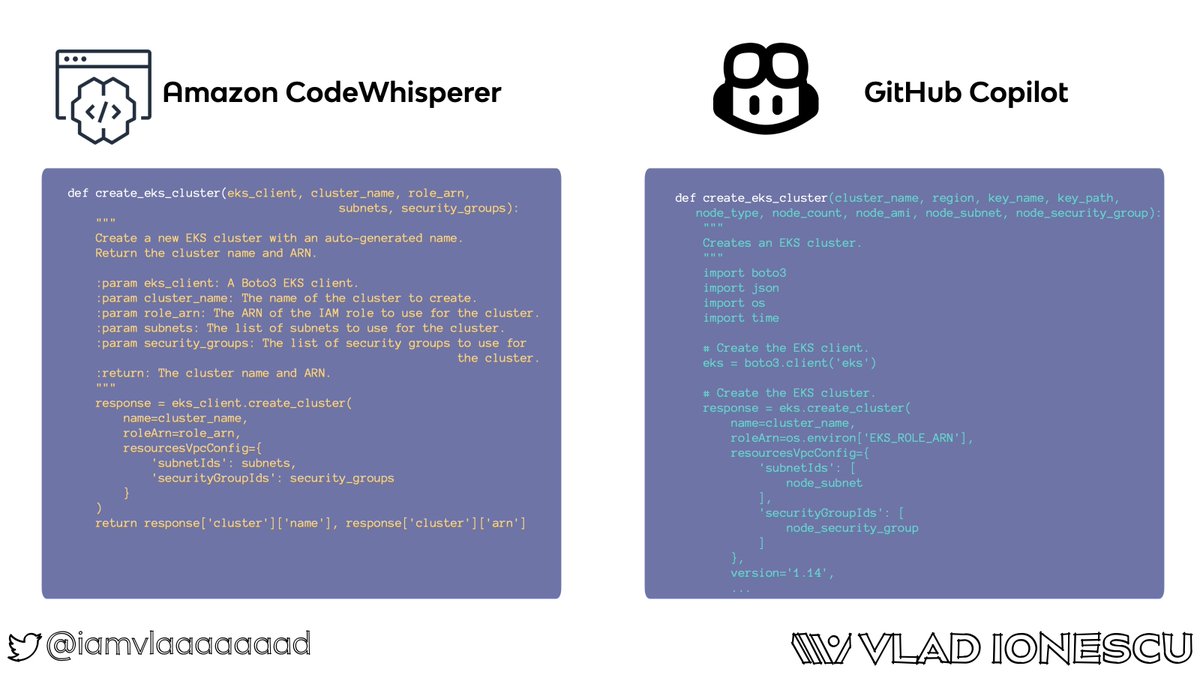

Scenario 4: EKS cluster creation!

Neither is great, but Copilot fails terribly by trying to create an EKS 1.14 cluster (the currently supported versions are 1.19 to 1.22).
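Here's a minimal sketch of guarding against that, assuming boto3. It pins the Kubernetes version explicitly and refuses anything outside the supported range. The version set is just the 1.19 to 1.22 range quoted above, so it will go stale. `create_eks_cluster` is a hypothetical helper, and a real cluster also needs an IAM role and subnets you've already created.

```python
# Versions quoted in this post as supported at the time of writing.
# This will go stale, so check the EKS docs before relying on it.
SUPPORTED_EKS_VERSIONS = {"1.19", "1.20", "1.21", "1.22"}


def create_eks_cluster(name, role_arn, subnet_ids, version="1.22", eks_client=None):
    """Create an EKS cluster, refusing versions outside SUPPORTED_EKS_VERSIONS.

    Returns the cluster status reported by the create call (e.g. "CREATING").
    """
    if version not in SUPPORTED_EKS_VERSIONS:
        raise ValueError(
            f"EKS version {version} is not supported; "
            f"pick one of {sorted(SUPPORTED_EKS_VERSIONS)}"
        )
    if eks_client is None:
        # Imported here so a stub client works without boto3 installed.
        import boto3
        eks_client = boto3.client("eks")
    response = eks_client.create_cluster(
        name=name,
        version=version,
        roleArn=role_arn,
        resourcesVpcConfig={"subnetIds": list(subnet_ids)},
    )
    return response["cluster"]["status"]
```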

Recency bias (suggesting outdated things like that EKS 1.14 cluster) and manual training are where I've seen all the Copilot PoCs stumble.

Scenario 5: send a message to SQS!

CodeWhisperer follows better practices, but Copilot's example is so muuuuch more helpful to me as a developer (not the best use of message attributes, but still).
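For comparison, here's a minimal sketch of sending a JSON message with one message attribute through boto3's `send_message`. The function name, the payload, and the `EventType`/`OrderCreated` attribute are all hypothetical. Attributes are handy for small bits of metadata that consumers can check without parsing the body.

```python
import json


def send_order_message(queue_url, order, sqs_client=None):
    """Send `order` (a dict) to SQS as JSON and return the MessageId."""
    if sqs_client is None:
        # Imported here so a stub client works without boto3 installed.
        import boto3
        sqs_client = boto3.client("sqs")
    response = sqs_client.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps(order),
        # Hypothetical attribute: metadata readable without parsing the body.
        MessageAttributes={
            "EventType": {"DataType": "String", "StringValue": "OrderCreated"},
        },
    )
    return response["MessageId"]
```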