Naïve Bayes is one of the best-known algorithms built on Bayesian probability!

But it assumes that features are independent of each other given the class, which is why it's called "Naïve".

Here are 5 interesting points you should know about this algo...

1. Probabilistic Classifier: Naïve Bayes is a probabilistic machine learning algorithm used for classification tasks. It predicts the probability of an instance belonging to each class and then assigns the instance to the class with the highest probability.

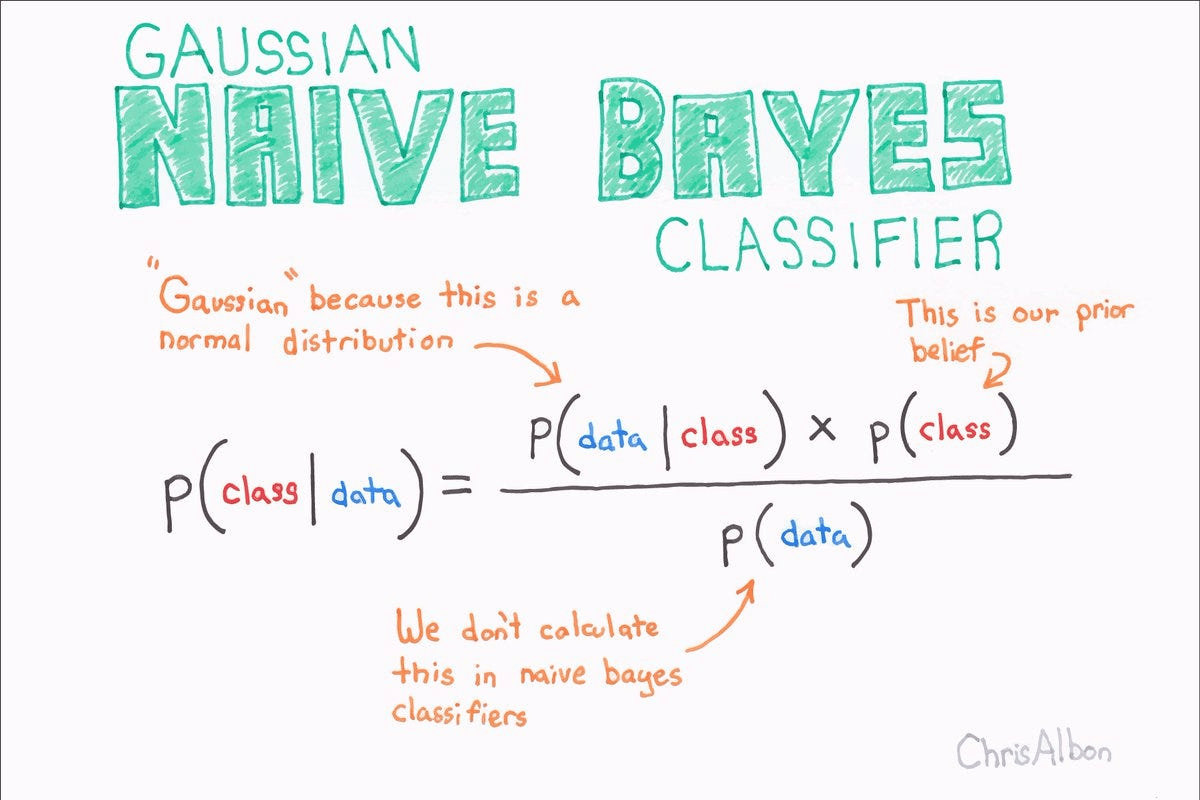

2. Bayes' Theorem: The algorithm is based on Bayes' theorem, a fundamental concept in probability theory. Bayes' theorem helps us calculate the probability of a hypothesis (in this case, a class label) given the evidence (feature values in the instance).
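Written out, the theorem looks like the formula below. The symbols are assumptions for illustration and do not appear in the original text: $C$ stands for a class label and $x$ for the feature values of one instance.

$$
P(C \mid x) = \frac{P(x \mid C)\, P(C)}{P(x)}
$$

Here $P(C)$ is the prior, $P(x \mid C)$ is the likelihood, and $P(C \mid x)$ is the posterior. The denominator $P(x)$ is the same for every class, so it can be ignored when only the most probable class is needed.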

3. Independence Assumption: The "Naïve" in Naïve Bayes comes from the assumption of feature independence.

It assumes that all the features (input variables) are independent of each other given the class, meaning that once the class is known, the value of one feature tells you nothing about the value of another.
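In formula form, the assumption lets the joint likelihood split into a product of per-feature terms. The notation is assumed for illustration: $C$ is a class label and $x_1, \dots, x_n$ are the feature values of one instance.

$$
P(x_1, \dots, x_n \mid C) = \prod_{i=1}^{n} P(x_i \mid C)
\quad\Longrightarrow\quad
P(C \mid x_1, \dots, x_n) \propto P(C) \prod_{i=1}^{n} P(x_i \mid C)
$$

This is what keeps the model cheap: each $P(x_i \mid C)$ can be estimated on its own instead of estimating one probability for every possible combination of feature values.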

4. Training Phase: During the training phase, the algorithm learns from labeled data.

It calculates the prior probabilities of each class (how often each class appears in the training data) and the likelihood probabilities of each feature for each class (how often a particular feature value appears in instances of a specific class).
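A minimal sketch of this counting step for categorical features might look like the following. The toy dataset, the feature names, and the `train` function are all hypothetical, made up for illustration, and no smoothing is applied.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (feature values, class label). Names and values are made up.
training_data = [
    ({"outlook": "sunny", "windy": "no"}, "play"),
    ({"outlook": "sunny", "windy": "yes"}, "stay"),
    ({"outlook": "rainy", "windy": "yes"}, "stay"),
    ({"outlook": "overcast", "windy": "no"}, "play"),
    ({"outlook": "rainy", "windy": "no"}, "play"),
]

def train(data):
    """Estimate priors P(class) and likelihoods P(feature=value | class) by counting."""
    class_counts = Counter(label for _, label in data)
    value_counts = defaultdict(Counter)  # (label, feature name) -> counts per value
    for features, label in data:
        for name, value in features.items():
            value_counts[(label, name)][value] += 1

    # Prior: how often each class appears in the training data.
    priors = {label: n / len(data) for label, n in class_counts.items()}

    # Likelihood: how often a feature value appears among instances of a class.
    likelihoods = {}
    for (label, name), counts in value_counts.items():
        likelihoods[(label, name)] = {
            value: n / class_counts[label] for value, n in counts.items()
        }
    return priors, likelihoods

priors, likelihoods = train(training_data)
print(priors)
print(likelihoods[("stay", "outlook")])
```

With plain counting like this, a feature value never seen with a class gets no probability at all, which is why practical implementations usually add Laplace (add-one) smoothing.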

5. Prediction Phase: In the prediction phase, the algorithm takes a new instance with its feature values. It applies Bayes' theorem using the learned probabilities from the training phase to calculate the probability of the instance belonging to each class.

It then assigns the instance to the class with the highest probability as the predicted class label.
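The prediction step can be sketched roughly as below. The probabilities are hypothetical numbers standing in for what training would produce, and the class and feature names are invented for the example. Working with sums of logs instead of products is a common choice to avoid numerical underflow, not something the text above prescribes.

```python
import math

# Hypothetical learned probabilities (illustrative numbers, not from real data).
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"contains_offer": {True: 0.8, False: 0.2},
             "has_link": {True: 0.7, False: 0.3}},
    "ham": {"contains_offer": {True: 0.1, False: 0.9},
            "has_link": {True: 0.3, False: 0.7}},
}

def predict(instance):
    """Return (predicted class, posterior probability per class) for one instance."""
    # log P(class) + sum of log P(feature value | class), per class.
    log_scores = {}
    for label, prior in priors.items():
        score = math.log(prior)
        for name, value in instance.items():
            score += math.log(likelihoods[label][name][value])
        log_scores[label] = score

    # Normalise so the posteriors sum to 1 (this plays the role of dividing by P(x)).
    top = max(log_scores.values())
    unnormalised = {label: math.exp(s - top) for label, s in log_scores.items()}
    total = sum(unnormalised.values())
    posteriors = {label: v / total for label, v in unnormalised.items()}

    # Assign the class with the highest posterior probability.
    best = max(posteriors, key=posteriors.get)
    return best, posteriors

label, posteriors = predict({"contains_offer": True, "has_link": True})
print(label, posteriors)
```

For this instance the unnormalised scores are 0.4 × 0.8 × 0.7 = 0.224 for spam and 0.6 × 0.1 × 0.3 = 0.018 for ham, so spam is predicted.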