Large language models have been a buzzword over the past year due to the release of many breakthrough models🤖

These models generate great output, but running inference on them is very expensive!

Introducing DeciCoder - an auto-regressive code generation model with excellent throughput

DeciCoder-1B is tailored for code generation in Python, Java, and JavaScript, and it uses a transformer decoder architecture.

The model's key selling point is high throughput with a compact memory footprint, which keeps it cost-effective even on modest GPUs.

Traditional methods for finding optimal neural network architectures are time-consuming and often miss efficient structures.

AutoNAC changes the game. It offers a compute-efficient, NAS-like (neural architecture search) way to derive architectures that balance accuracy with fast inference.

DeciCoder ensures remarkable results even on budget-friendly GPUs.

The power of AutoNAC's innovation shines brightly, providing businesses and applications with high-quality code generation at the lowest cost possible.

Key details of DeciCoder:

Number of Parameters: 1 Billion

Training Dataset: 'The Stack' dataset (the Python, JavaScript, and Java subsets)

Supported Coding Languages: Python, JavaScript, Java

Context Window: 2048 tokens

Technical specifications of model architecture:

Layers: 20

Embedding Size: 2048

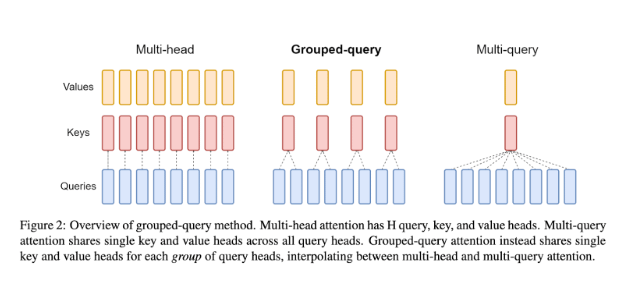

Attention Heads: 32 (GQA-8: grouped-query attention with 8 key-value heads)

FFN Layer Expansion: 4.25

Positional Embeddings: RoPE (rotary position embeddings)
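To see why GQA-8 helps with the compact memory footprint, here is a rough back-of-the-envelope sketch of the key-value cache size implied by the specs above. It assumes 16-bit values (2 bytes each), batch size 1, and a head dimension equal to embedding size divided by attention heads; `DecoderSpec` and `kv_cache_bytes` are hypothetical names made up for this illustration, not part of any DeciCoder code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderSpec:
    """Architecture numbers copied from the spec list above."""
    layers: int = 20
    hidden_size: int = 2048
    attention_heads: int = 32
    kv_heads: int = 8            # GQA-8
    ffn_expansion: float = 4.25

    @property
    def head_dim(self) -> int:
        # Assumption: head dimension = embedding size / attention heads.
        return self.hidden_size // self.attention_heads

    @property
    def ffn_hidden_size(self) -> int:
        return int(self.hidden_size * self.ffn_expansion)


def kv_cache_bytes(spec, seq_len, kv_heads=None, bytes_per_value=2):
    """Estimate KV-cache size for one sequence (batch size 1).

    bytes_per_value=2 assumes fp16/bf16 storage.
    """
    heads = spec.kv_heads if kv_heads is None else kv_heads
    # Factor 2: one key tensor plus one value tensor per layer.
    return 2 * spec.layers * heads * spec.head_dim * bytes_per_value * seq_len


spec = DecoderSpec()
gqa = kv_cache_bytes(spec, seq_len=2048)
# Hypothetical baseline: every attention head keeps its own keys and values.
mha = kv_cache_bytes(spec, seq_len=2048, kv_heads=spec.attention_heads)

print(f"head_dim = {spec.head_dim}, ffn_hidden_size = {spec.ffn_hidden_size}")
print(f"GQA-8 KV cache at 2048 tokens: {gqa / 2**20:.0f} MiB")
print(f"Full multi-head baseline:      {mha / 2**20:.0f} MiB")
```

Under these assumptions, sharing 8 key-value heads across 32 query heads cuts the cache to a quarter of what a full multi-head layout would need at the same context length.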

Check out the model on HuggingFace at the link below, and make sure you like the model👇

🔗 huggingface.co/Deci/DeciCoder-1b
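If you'd rather try it locally, here's a minimal sketch using the `transformers` library. It assumes `torch` and `transformers` are installed, and that the checkpoint needs `trust_remote_code=True` because the repo ships its own model code; the helper name `generate_code` is made up for this example, and the first call downloads the weights.

```python
CHECKPOINT = "Deci/DeciCoder-1b"


def generate_code(prompt: str, max_new_tokens: int = 100, device: str = "cpu") -> str:
    """Complete a code prompt with DeciCoder-1b (hypothetical helper)."""
    # Imported lazily so that defining this helper doesn't need the heavy deps.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(CHECKPOINT)
    model = AutoModelForCausalLM.from_pretrained(
        CHECKPOINT,
        torch_dtype=torch.bfloat16,   # assumption: bf16 is supported on your device
        trust_remote_code=True,       # assumption: the repo provides custom model code
    ).to(device)

    inputs = tokenizer.encode(prompt, return_tensors="pt").to(device)
    outputs = model.generate(inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(outputs[0])
```

For example, `print(generate_code("def print_hello_world():", device="cuda"))` would ask the model to complete a small Python function on a GPU.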

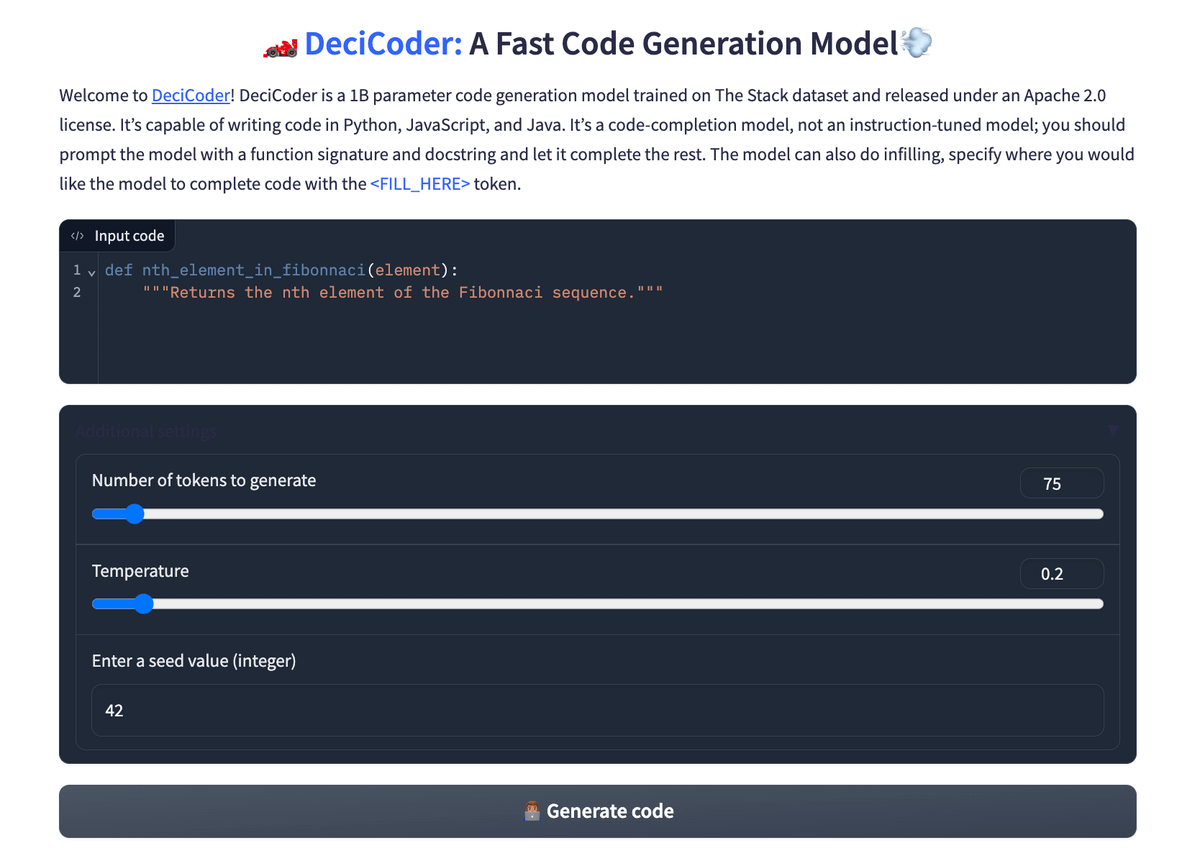

Looking to demo the model?

Check the HF space below and like/share with others👍

🔗huggingface.co/spaces/Deci/DeciCoder-Demo

Also, if you are interested in experimenting with the model, here's the full notebook with demo code👇

colab.research.google.com/drive/1JCxvBsWCZKHfIcHSMVf7GZCs3ClMQPjs#scrollTo=w6_Tmeuz5Uoi

Learn more about DeciCoder in the announcement blog👇

deci.ai/blog/decicoder-efficient-and-accurate-code-generation-llm