Bagging and boosting are the most commonly used terms in ensemble techniques 🛠🤖

Here's everything you should know about these ensemble methods:

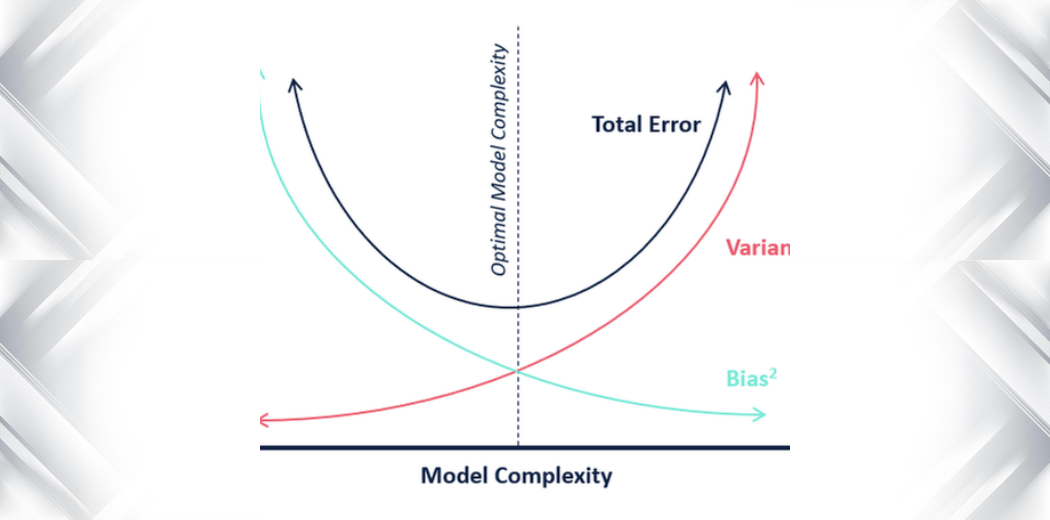

Machine learning models sometimes overfit or underfit the training data and fail to generalize to unseen data

An underfit model is biased: it misses patterns even in the training data. An overfit model fits the training data too closely, noise included, which results in high variance on unseen data

Both underfitting and overfitting result in high overall error, which is a sign of poor modeling

If model complexity is high, the model tends to have high variance; if model complexity is low, the model tends to be highly biased
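One common way to make this trade-off precise is the bias-variance decomposition. The version below is a sketch that assumes squared-error loss and data generated as $y = f(x) + \varepsilon$, where $\varepsilon$ is zero-mean noise with variance $\sigma^2$ (these symbols are introduced here for illustration and are not from the thread):

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big] = \mathrm{Bias}\big[\hat{f}(x)\big]^2 + \mathrm{Var}\big[\hat{f}(x)\big] + \sigma^2
$$

Low-complexity models tend to make the bias term large, high-complexity models tend to make the variance term large, and the noise term $\sigma^2$ cannot be reduced by any model.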

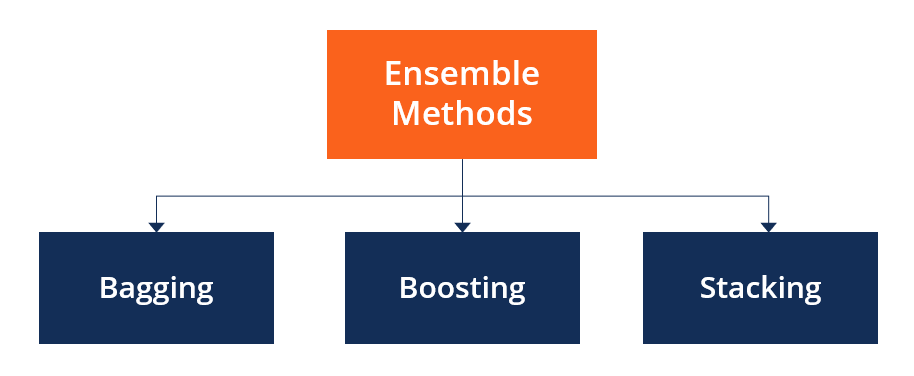

To mitigate this, we can use ensemble methods, which combine several models into one predictor. There are three major types of ensemble methods:

1/ Bagging

2/ Boosting

3/ Stacking

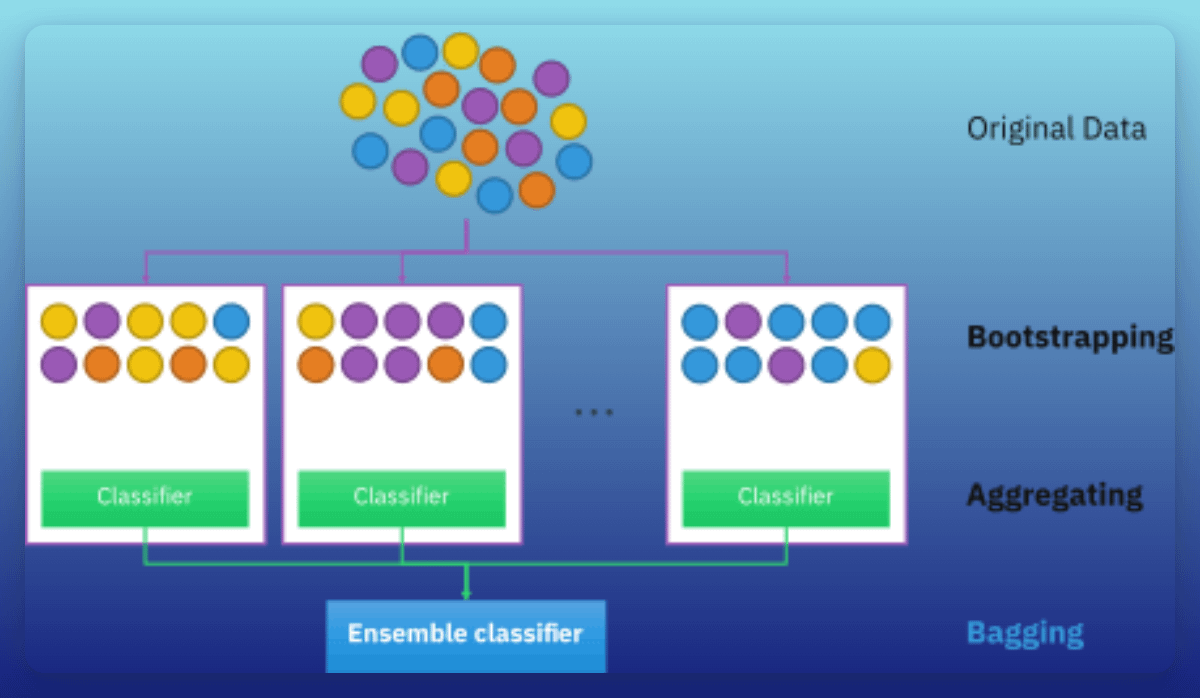

1/ Bagging

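Bagging, also known as bootstrap aggregation, builds multiple models, each on a random sample of the training data drawn with replacement (a bootstrap sample), and aggregates their predictions (majority vote for classification, averaging for regression) to derive the final prediction for a given instance.

It helps to reduce variance error in ML modeling.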
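The bagging idea above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the base learner is a hand-rolled decision stump (a depth-1 tree), and the helper names `fit_stump`, `bagging_fit`, `bagging_predict` and the toy data are all made up for this example.

```python
import random
from collections import Counter

def fit_stump(X, y):
    """Fit a depth-1 decision tree: one feature, one threshold, 0/1 labels."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for left, right in ((0, 1), (1, 0)):
                errors = sum((left if row[j] <= t else right) != label
                             for row, label in zip(X, y))
                if best is None or errors < best[0]:
                    best = (errors, j, t, left, right)
    _, j, t, left, right = best
    return lambda row: left if row[j] <= t else right

def bagging_fit(X, y, n_models=25, seed=0):
    """Train each model on its own bootstrap sample of the training data."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n row indices drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models

def bagging_predict(models, row):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(model(row) for model in models)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): one feature, label is 1 when the feature is >= 0.5.
X = [[i / 20] for i in range(20)]
y = [int(row[0] >= 0.5) for row in X]
models = bagging_fit(X, y)
```

Calling `bagging_predict(models, [0.22])` asks all 25 stumps for a vote and returns the most common label. For regression, the vote would be replaced by an average of the predictions.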

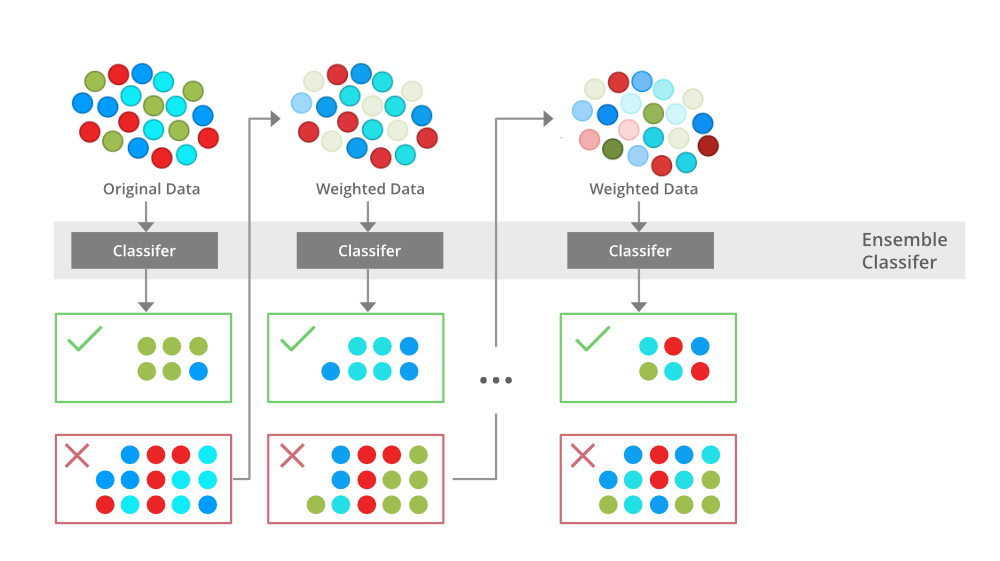

2/ Boosting

In boosting, the ML algorithm builds models sequentially, with each new model trained to correct the errors of the models before it (for example, by giving more weight to misclassified samples or by fitting the remaining residuals). It is mainly used to reduce bias error in ML modeling.

In both bagging and boosting, the base algorithm is usually a decision tree.
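To make the sequential part concrete, here is a rough sketch of one boosting flavor: gradient boosting for regression with squared error, where each new stump is fit to the residuals left by the previous ones. It is only one of several boosting variants, and the function names, the `learning_rate` value, and the toy sine data are assumptions chosen for this example.

```python
import math

def fit_regression_stump(xs, targets):
    """Find the single threshold split that minimizes squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean

def boost_fit(xs, ys, n_rounds=50, learning_rate=0.3):
    """Build stumps one after another, each fit to the current residuals."""
    base = sum(ys) / len(ys)          # start from a constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_regression_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step toward the residuals the new stump explains.
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data (hypothetical): a sine curve that a single stump cannot fit well.
xs = [i / 10 for i in range(30)]
ys = [math.sin(x) for x in xs]
mse_single = mse(boost_fit(xs, ys, n_rounds=1, learning_rate=1.0), xs, ys)
mse_boosted = mse(boost_fit(xs, ys), xs, ys)
```

A single stump is a high-bias model, so `mse_single` stays large on the training data. Adding many small corrections in sequence lets `mse_boosted` drop well below it, which is the bias reduction described above.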

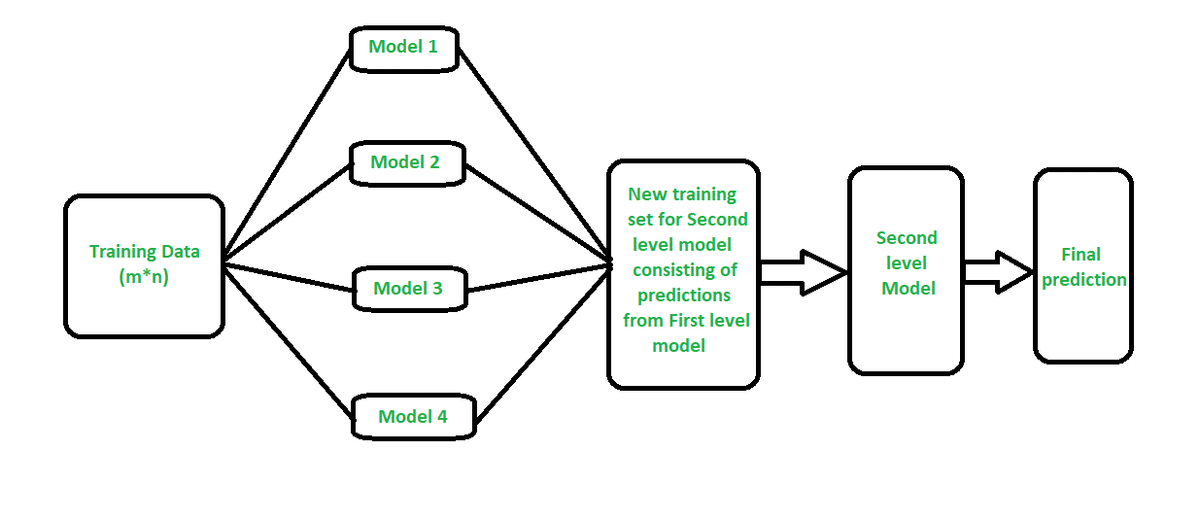

3/ Stacking

Stacking combines the predictions of multiple models by training a final model (a meta-model) on those predictions to predict the outcome for the instance.

It lets you use a wide range of algorithms and combine the strengths of each to increase the predictive power of the overall model, so it generalizes well to unseen data.
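Below is a small sketch of stacking under simplifying assumptions. Two different base models (a least-squares line and a k-nearest-neighbors averager, both hand-written here) are trained on one half of the data, and a deliberately tiny meta-model, a single blending weight `best_w`, is fit on their predictions for the held-out half. All names and the toy data are hypothetical, and practical setups often use out-of-fold predictions from cross-validation and a richer meta-model instead.

```python
import math
import random

def fit_linear(xs, ys):
    """Base model 1: ordinary least-squares line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return lambda x: mean_y + slope * (x - mean_x)

def fit_knn(xs, ys, k=3):
    """Base model 2: average the targets of the k nearest training points."""
    pairs = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

# Toy data (hypothetical): a linear trend plus a wiggle.
rng = random.Random(0)
xs = [i / 10 for i in range(40)]
ys = [0.5 * x + math.sin(2 * x) for x in xs]
idx = list(range(len(xs)))
rng.shuffle(idx)
train, hold = idx[:20], idx[20:]

# Level 0: train the base models on the training half only.
base_models = [fit([xs[i] for i in train], [ys[i] for i in train])
               for fit in (fit_linear, fit_knn)]

# Level 1 inputs: base-model predictions on data they have not seen.
hold_preds = [[m(xs[i]) for m in base_models] for i in hold]
hold_targets = [ys[i] for i in hold]

def blend_sse(w):
    """Squared error of the blend w * linear + (1 - w) * knn on the holdout."""
    return sum((w * a + (1 - w) * b - t) ** 2
               for (a, b), t in zip(hold_preds, hold_targets))

# Meta-model: pick the blending weight by a simple grid search.
best_w = min((step / 20 for step in range(21)), key=blend_sse)

def stacked_predict(x):
    a, b = (m(x) for m in base_models)
    return best_w * a + (1 - best_w) * b
```

Because the grid includes `w = 0` and `w = 1`, the blend can never do worse on the holdout than either base model alone. That comparison is optimistic, since the weight was tuned on the same holdout, so a separate test set is still needed to judge generalization.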