gradient descent is a popular optimization algorithm used in machine learning and deep learning right now, and it's surprisingly easy to understand. it's important to get a good grasp on the concepts driving modern tech so that you don't get left in the dust! free knowledge:

FIRST you need to know what the loss function is. a loss function is a way of measuring how well your model predicts the expected outcome. if the model's predictions deviate a lot from the actual results, the loss function produces a large number.
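to make that concrete, here's a tiny sketch of one common loss function, mean squared error. the name `mse_loss` and all the numbers are made up for illustration, and this is just one of many possible loss functions.

```python
def mse_loss(predictions, targets):
    """Mean squared error: the average of the squared differences."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical data, chosen only to show the contrast.
targets = [1.0, 2.0, 3.0]
good_predictions = [1.1, 1.9, 3.2]  # close to the targets
bad_predictions = [5.0, -2.0, 9.0]  # far from the targets

good_loss = mse_loss(good_predictions, targets)
bad_loss = mse_loss(bad_predictions, targets)
print("loss for close predictions:", good_loss)
print("loss for far-off predictions:", bad_loss)
```

the predictions that sit close to the targets get a small loss, and the ones that are way off get a much bigger one.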

so basically, we want the loss function to produce the smallest number possible. gradient descent is the algorithm used to minimize the loss function by searching a large parameter space for the parameter values that make the loss as small as possible. bear with me.

example: if we have the loss function f(x, y) = x*x + 2*y*y, then the optimal values for the parameters are x=0 and y=0, because that is where the function is at its minimum. this function is simple, but in higher dimensions it may be incredibly difficult to solve directly for the point where the slope is zero.
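for this particular function you can do the solving by hand. a quick sketch, using partial derivatives (the slope along each variable on its own) and setting them to zero:

```latex
\frac{\partial f}{\partial x} = 2x = 0 \;\Rightarrow\; x = 0,
\qquad
\frac{\partial f}{\partial y} = 4y = 0 \;\Rightarrow\; y = 0
```

since x*x and 2*y*y can never be negative, f(0, 0) = 0 really is the smallest value the function can take.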

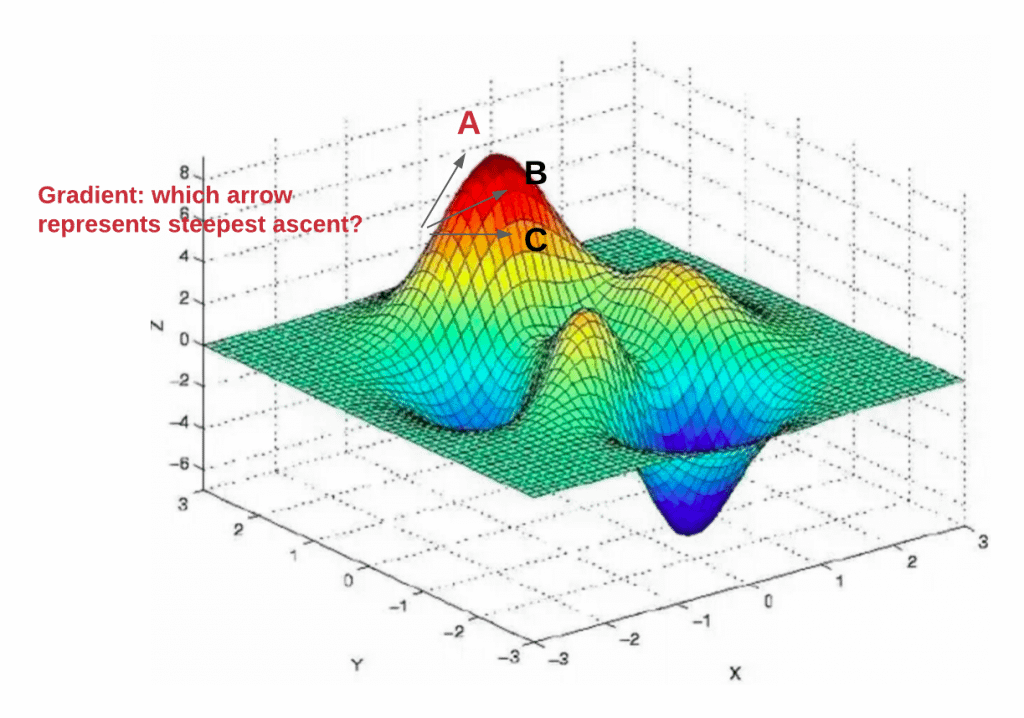

approximating the answer with gradient descent may be much faster than trying to solve the problem exactly, whether by hand or with a computer. okay so what's a gradient? the gradient of a function at a point is a vector that points in the direction of steepest increase of the function. here's a quick exercise:

once the gradient of the function at a point is calculated, the direction of steepest DESCENT at that point is found by multiplying the gradient by -1. eezypeezy.

-1 * gradient = direction of steepest descent
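here's a small sketch of that for the example function f(x, y) = x*x + 2*y*y. the helper names (`gradient_f`, `numerical_gradient`) and the point (3, 1) are just picked for illustration. the numerical version is only there as a sanity check on the hand-derived one.

```python
def f(x, y):
    return x * x + 2 * y * y


def gradient_f(x, y):
    # Partial derivatives worked out by hand: df/dx = 2x, df/dy = 4y.
    return (2 * x, 4 * y)


def numerical_gradient(func, x, y, h=1e-6):
    # Central differences: nudge each input a little and measure the change.
    df_dx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    df_dy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return (df_dx, df_dy)


point = (3.0, 1.0)
grad = gradient_f(*point)
approx_grad = numerical_gradient(f, *point)
descent_direction = (-grad[0], -grad[1])

print("gradient:", grad)                            # (6.0, 4.0)
print("numerical check:", approx_grad)
print("steepest descent direction:", descent_direction)  # (-6.0, -4.0)

# A small step along the descent direction should lower f.
step = 0.01
new_point = (point[0] + step * descent_direction[0],
             point[1] + step * descent_direction[1])
print("f before:", f(*point), "f after:", f(*new_point))
```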

now imagine you're standing at the top of a mountain and you want to descend. also assume it's EXTREMELY foggy so you can't really see where to go. you only have a tool that tells you the direction of steepest descent (how convenient?!). what do you do?

you'll probably take a step in the direction of steepest descent, use the tool and find the new direction of steepest descent from your new location, take another step in that direction, and keep doing this until you reach the bottom.
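the walk down the mountain can be sketched in a few lines of code. this is a minimal version on the example function f(x, y) = x*x + 2*y*y. the starting point, the step size of 0.1, and the 100 steps are arbitrary picks, not anything special.

```python
def gradient_f(x, y):
    # Gradient of f(x, y) = x*x + 2*y*y.
    return (2 * x, 4 * y)


def gradient_descent(start, step_size=0.1, num_steps=100):
    """Repeatedly step in the direction of steepest descent."""
    x, y = start
    path = [(x, y)]
    for _ in range(num_steps):
        gx, gy = gradient_f(x, y)   # "use the tool" at the current spot
        x -= step_size * gx         # step against the gradient
        y -= step_size * gy
        path.append((x, y))
    return path


path = gradient_descent(start=(3.0, 2.0))
final_x, final_y = path[-1]
print("started at:", path[0])
print("ended at:", (final_x, final_y))
```

the end point lands extremely close to (0, 0), which is the bottom of this particular mountain.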

in this analogy, you are the algorithm, and the path taken down the mountain represents the sequence of parameter settings that the algorithm will explore. the tool used to measure steepness is differentiation. the direction you choose to travel in is the negative of the gradient.

this diagram shows how parameter θ gets updated: it moves a small step in the direction opposite to the gradient. this formula tells us our next position. to estimate the optimal parameter values, this process is repeated until convergence.
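written out, the standard gradient descent update usually looks like this. the symbol α for the step size is my own notation here, and J stands for the loss function being minimized:

```latex
\theta_{\text{new}} = \theta_{\text{old}} - \alpha \, \nabla J(\theta_{\text{old}})
```

the minus sign is the "opposite direction" part, and α controls how small the small steps are.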

repeating until convergence just means you keep going until you reach a minimum, and that's where the algorithm stops. this could be a local or a global minimum, which can lowkey cause problems, but for simplicity i won't get into that right now. (look it up though frfr)
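one common way to decide "we've converged" is to stop when the slope is basically zero. here's a rough sketch of that, on a made-up 1D function g(x) = x^4 - 3x^2 + x that happens to have two valleys. the function, the tolerance, and the step size are all assumptions chosen for the demo.

```python
def g(x):
    # Hypothetical function with two valleys: a deeper one on the left
    # (near x = -1.30) and a shallower one on the right (near x = 1.13).
    return x**4 - 3 * x**2 + x


def g_prime(x):
    # Derivative of g.
    return 4 * x**3 - 6 * x + 1


def descend_until_converged(x, step_size=0.01, tolerance=1e-6, max_steps=10_000):
    """Step downhill until the slope is nearly flat (or we give up)."""
    for steps_taken in range(max_steps):
        slope = g_prime(x)
        if abs(slope) < tolerance:      # flat enough: call it converged
            return x, steps_taken
        x -= step_size * slope
    return x, max_steps


left_x, left_steps = descend_until_converged(-2.0)
right_x, right_steps = descend_until_converged(2.0)

print("start at -2.0 -> x =", left_x, "g(x) =", g(left_x))
print("start at  2.0 -> x =", right_x, "g(x) =", g(right_x))
```

both runs stop at a minimum, but only the one that started on the left finds the deeper valley. the other one settles into a local minimum and has no idea anything better exists.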

now imagine you have a machine learning problem and want to train your algorithm with gradient descent to minimize your loss function J(w, b) and reach its local minimum by tweaking its parameters (w and b). we know we want to find the values of w and b marked by the red dot.

to start finding the right values, we initialize w and b with some random numbers. gradient descent then starts at that point (somewhere around the top), and it takes one step after another in the steepest downhill direction until it reaches the point where the loss function is minimized.
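here's what that could look like for a tiny linear model, prediction = w*x + b, with mean squared error as J(w, b). the data, the choice of loss, the seed, the step size of 0.05, and the 2000 steps are all assumptions for this sketch.

```python
import random

# Hypothetical data generated from y = 2x + 1, so the best fit is w=2, b=1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
n = len(xs)


def loss(w, b):
    # J(w, b): mean squared error of the predictions w*x + b.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradients(w, b):
    # Partial derivatives of J with respect to w and b.
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


random.seed(0)                 # fixed seed so the run is repeatable
w = random.uniform(-1, 1)      # random starting point
b = random.uniform(-1, 1)
initial_loss = loss(w, b)

step_size = 0.05
for _ in range(2000):
    dw, db = gradients(w, b)
    w -= step_size * dw        # step downhill in w
    b -= step_size * db        # step downhill in b

final_loss = loss(w, b)
print("w =", w, "b =", b)
print("loss went from", initial_loss, "to", final_loss)
```

w and b end up very close to 2 and 1, which is the line the data came from.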

how big the steps are is determined by the learning rate, which decides how slow or fast we will move toward the optimal weights. for gradient descent to reach the local minimum we must set the learning rate to an appropriate value, neither too low nor too high.

if the steps it takes are too big, it may never reach the local minimum because it keeps overshooting and bouncing back and forth across the valley of the convex loss function (left image above). if we set the learning rate to a very small value, the optimization can be super slow. (right image)
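you can see both failure modes on the simplest possible example, f(x) = x*x, whose minimum is at x = 0. the three learning rates below are hand-picked for the demo, so treat this as an illustration and not a recipe.

```python
def run(learning_rate, start=5.0, num_steps=20):
    """Gradient descent on f(x) = x*x, returning every position visited."""
    x = start
    history = [x]
    for _ in range(num_steps):
        x -= learning_rate * 2 * x   # the derivative of x*x is 2x
        history.append(x)
    return history


too_big = run(1.0)       # overshoots the minimum by exactly as much each time
reasonable = run(0.1)    # shrinks toward 0 steadily
too_small = run(0.001)   # moves, but barely

print("too big:    ", too_big[:4], "... ends at", too_big[-1])
print("reasonable:  ends at", reasonable[-1])
print("too small:   ends at", too_small[-1])
```

with a learning rate of 1.0 the position just flips between 5.0 and -5.0 forever. with 0.001 it has barely left the starting point after 20 steps. 0.1 gets close to the minimum in the same number of steps.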

alright i'm tired but those are some basics. i hope this helps people. it's a pretty cool algorithm and it really shouldn't intimidate you at all.