The amount of data needed to extract any relevant information increases exponentially with the number of features in your dataset.

This is the Curse of Dimensionality.

In English: "More features is not necessarily a good thing."

But of course, it's not that simple:

Sometimes, adding another feature to your dataset is what could set your model apart.

That one feature may be what separates a bad model from a great one.

So it makes sense to add more features. Who knows; you might get lucky.

But with every new feature, you increase the amount of data you need exponentially.

This means that while 100 samples of one feature might be enough for your model, you might need 100² = 10,000 samples if you add a second feature.

(So it's not 100 + 100, but 100 × 100! With n features, that becomes 100ⁿ.)
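
To make the arithmetic concrete, here is a minimal sketch. It assumes you want to keep the same sampling density (100 values along each feature axis), which is a simplification, and the function name `samples_needed` is made up for illustration.

```python
def samples_needed(per_feature: int, n_features: int) -> int:
    """Samples required to keep `per_feature` values along every feature axis.

    Assumption: we want a full grid, so the count multiplies with each new feature.
    """
    return per_feature ** n_features


if __name__ == "__main__":
    for n in range(1, 5):
        print(f"{n} feature(s): {samples_needed(100, n):,} samples")
```

Real datasets rarely need a full grid, so treat these numbers as a worst-case intuition rather than a rule.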

This isn't good.

As always, there's a trade-off you need to consider:

1. More features might give you more information

2. More features will force you to get more data

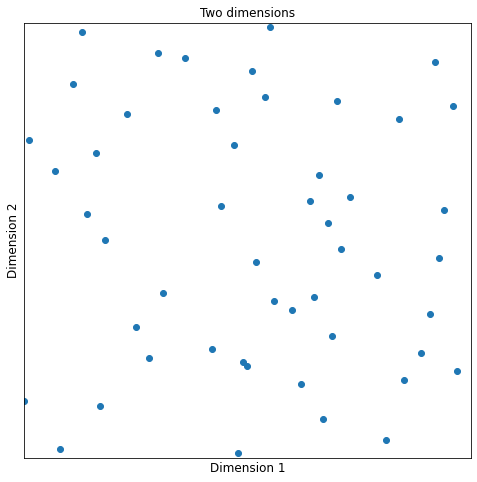

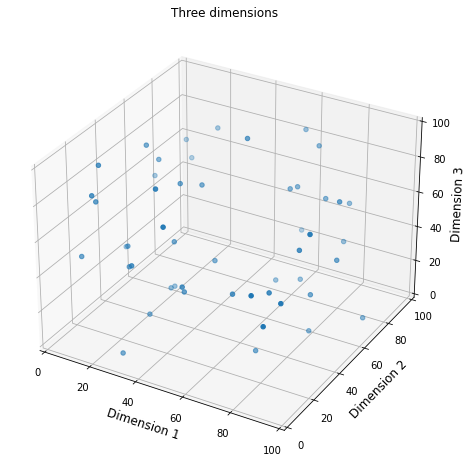

I put together a quick example showing this.

This notebook contains a very simple example of how much harder it becomes to detect patterns in the data as we add more features to the dataset:

deepnote.com/@svpino/Curse-of-Dimensionality-tsBiUhWJSBmFba3HRAqQog
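
If you want a feel for the effect without opening the notebook, here is a separate, minimal sketch (not the notebook's code). It assumes uniformly random data and looks at one well-known symptom: as features grow, the nearest and farthest points end up almost equally far away, so "similar" stops meaning much. The name `distance_contrast` is hypothetical.

```python
import math
import random


def distance_contrast(n_points: int, n_features: int, seed: int = 0) -> float:
    """Relative gap between the farthest and nearest point from a random query.

    Computed as (max - min) / min over Euclidean distances.
    Assumption: every feature is drawn uniformly from [0, 1).
    """
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(n_features)] for _ in range(n_points)]
    query = [rng.random() for _ in range(n_features)]
    dists = [math.dist(query, p) for p in points]
    return (max(dists) - min(dists)) / min(dists)


if __name__ == "__main__":
    # Same number of samples every time; only the feature count changes.
    for d in (2, 10, 100, 1000):
        print(f"{d:>4} features: contrast = {distance_contrast(200, d):.2f}")
```

With the sample count held fixed, the contrast should shrink as features are added, which is one way of seeing why patterns get harder to pick out.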

So what's the lesson here?

Always keep in mind that there's a significant cost associated with adding new features to your dataset.

Constantly evaluate whether the benefit outweighs the cost.

As usual, experimentation is critical.