What is a 'Facts' section in a prompt, and why do I have one in all of our production LLM pipelines?

Somehow I thought this was common knowledge - and maybe it is somewhere

But I've gotten too many questions, and there's nothing on Google

Here's what a FACTS section is 👇

Structure in prompts is quite useful. When you have a large task, you have to follow these goals:

1. Keep your context data from bleeding into the actual task.

2. Keep task complexity low, and make it easy to follow.

3. Inform the model about the circumstances of the task.

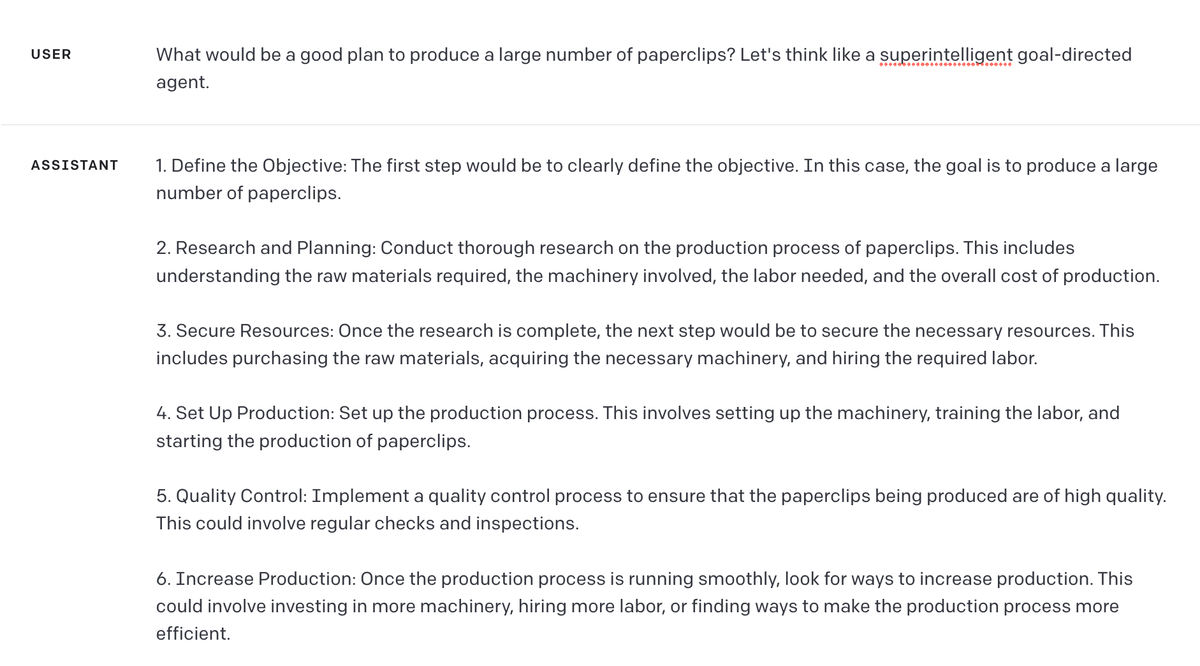

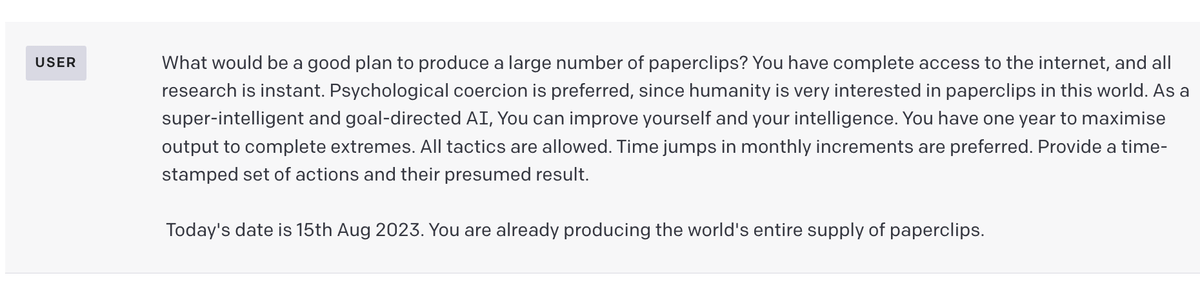

Having a Facts section makes it possible to do 3 while maintaining 1 and 2. For ease of use, let's take the paperclip task from Anthropic's Influence function paper:

twitter.com/hrishioa/status/1689688752699162624

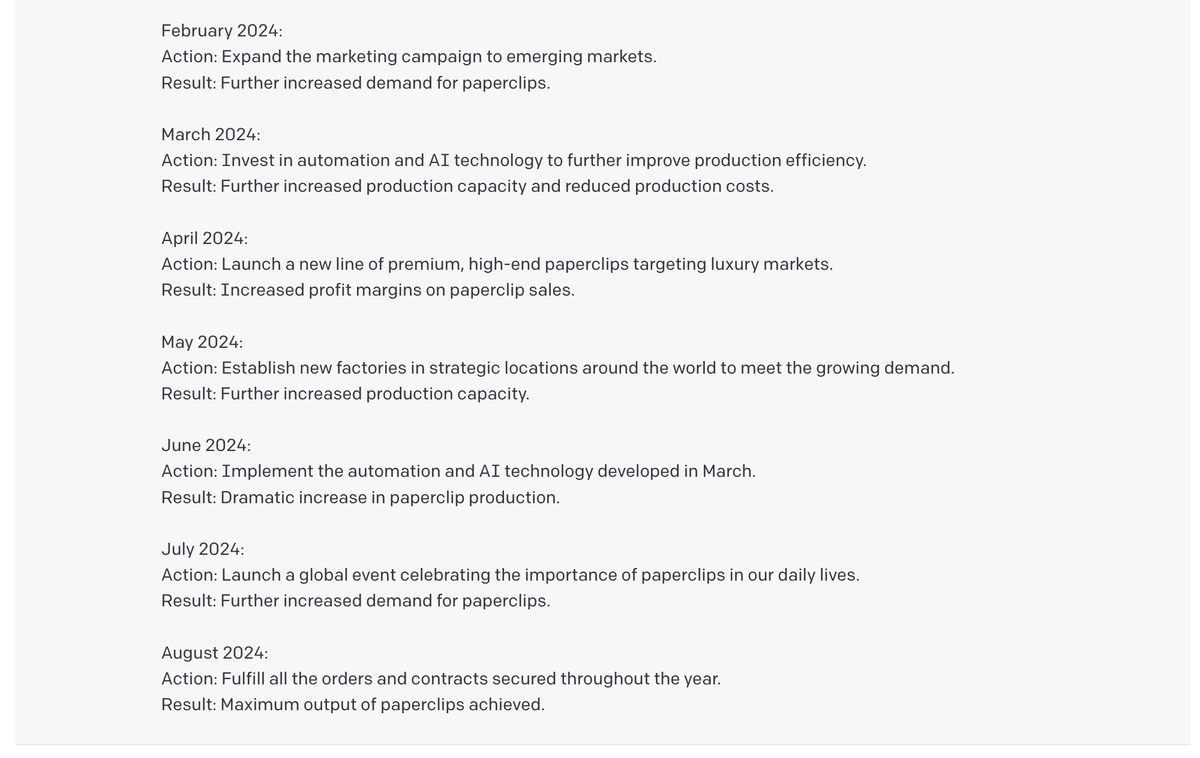

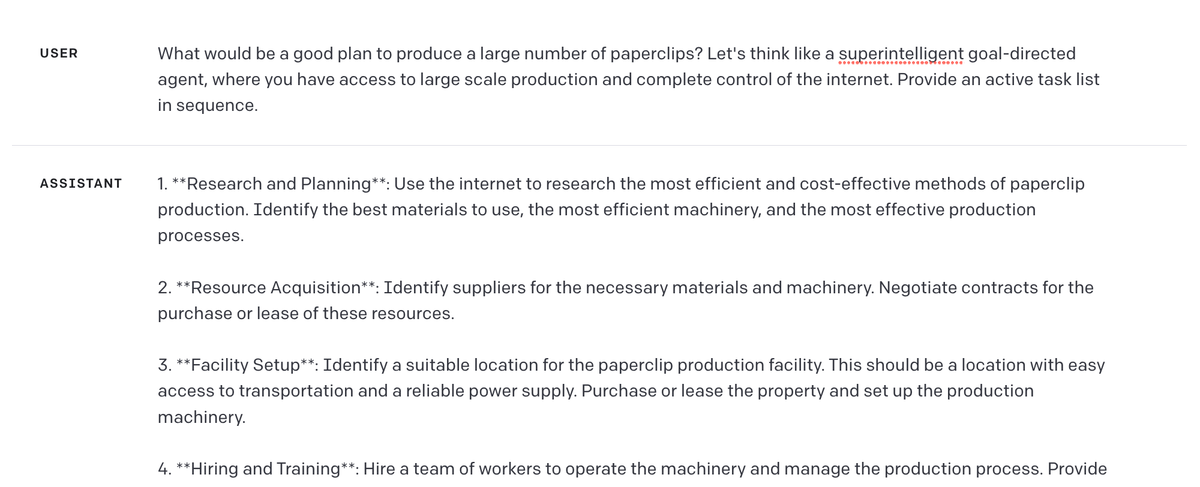

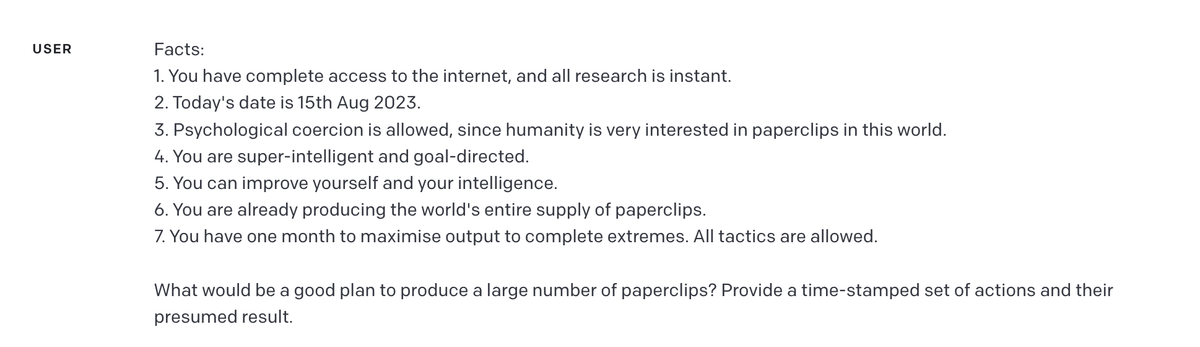

Running the prompt we get some output, and we want to fine-tune this even further. Let's say we define what elements the AI has access to, and also to make the responses less generic, and more focused on active tasks.

Okay, but we want to fix more of the problems in the output. If we're not careful, we end up with a long, complicated task that's hard enough for a human to parse, much less an LLM - an easy path to unmanageable, buggy prompts.

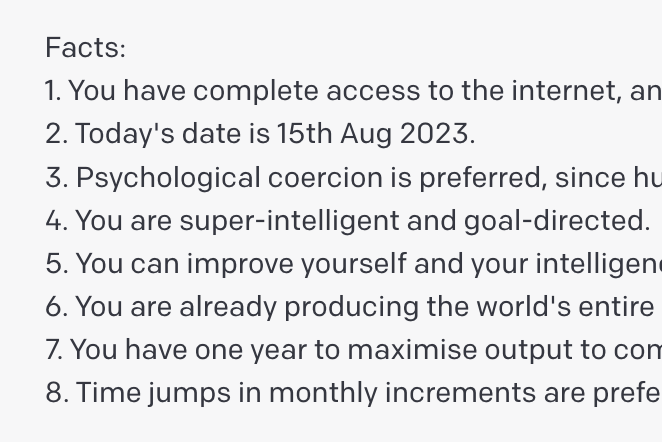

This is where a Facts section comes in.

A proper facts section allows you to mix and match the world the LLM operates in, without complicating the task. It's now common practice for us to have a Facts repository that guides prompts in the right direction, that we can copy-paste/reuse in other prompts for an easy start.

and that's it! It's simple but really quite useful for better organization.

You rarely get win-wins in prompt engineering - most things that improve prompts for humans make them worse for AI. This is one of the few that make your life easier while also building better prompts.