Bit rushed but here's an example of Contextual Decomposition (new name we're landing on) - it's 0330 so forgive me if it's sloppy

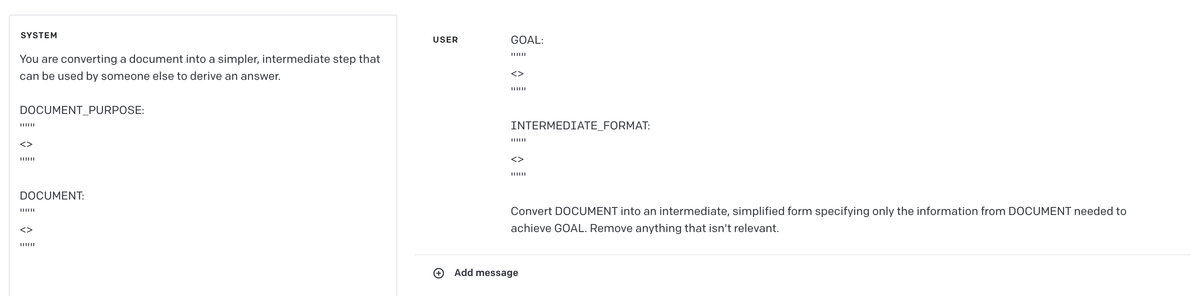

Took a little time to generalize the process into a prompt template you can use (linked at the end). This is it:

The template could use a lot more work, and for specific use-cases you'll want to modify it to better fit what you want.

But it should be a good starting point, and demonstrate the concept. Read the original thread for the methodology, before I go into an example.

twitter.com/hrishioa/status/1690453115471040512

Let's say we have a SAFE note from YC - first off, congrats! But now you need to run scenarios on what you'll be left with after Demo Day, how things layer, what happens to you after Series A? Also, one of your investors wants a pro-rata letter.

ycombinator.com/documents

Simplest thing is to throw everything into an LLM - if you have the context length.

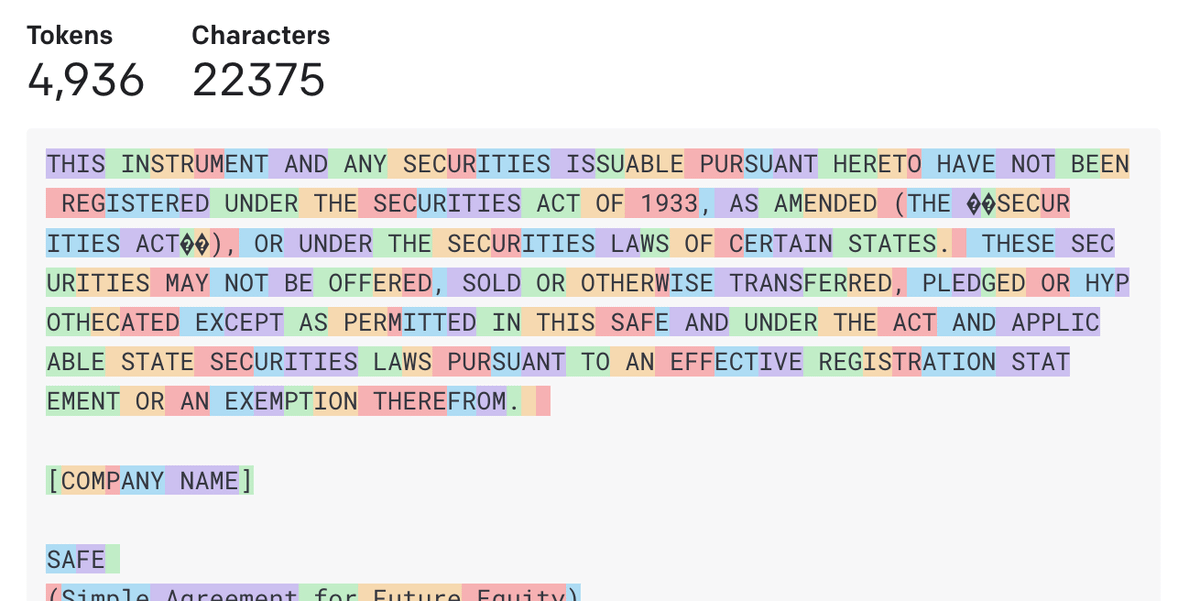

The raw SAFE is 4936 tokens, the simple side letter is 746, not to mention anything else you might have from previous rounds.

You can still throw it in and ask for an answer.

From some quick tests, GPT-4 gets it right most of the time, Claude-2 slightly less but still great. 3.5 fails often, Claude-instant about the same.

Problem is, short of reading everything in your big context, how do you error correct? How do you improve?

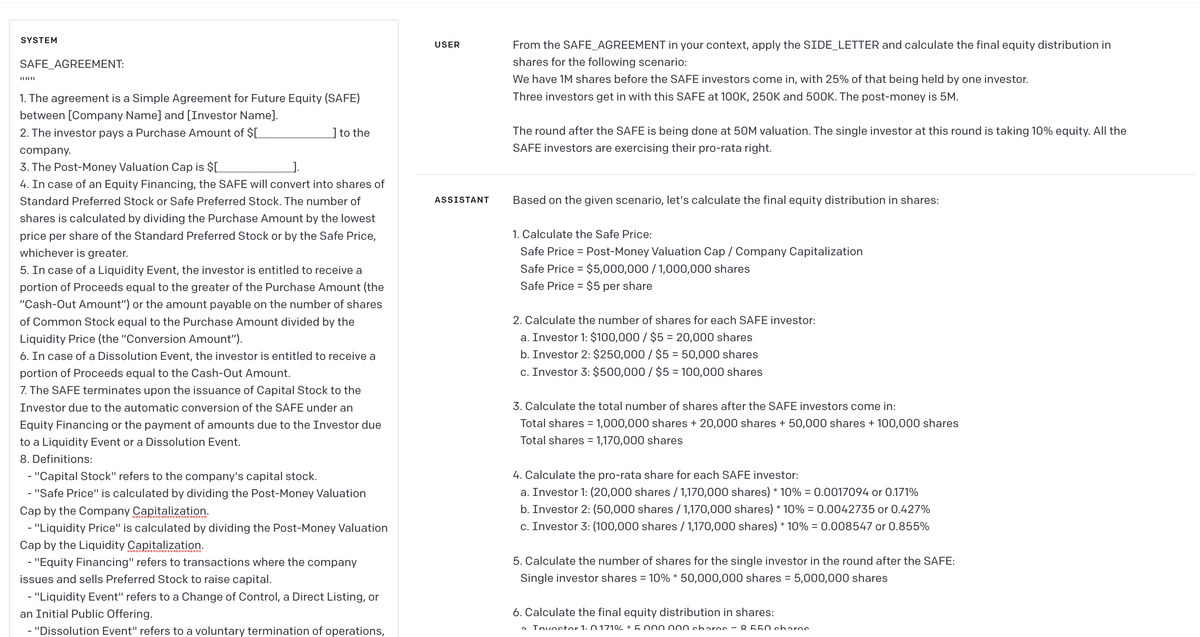

Let's use the prompt template to get an intermediate representation of the SAFE and the side letter, both focused on the information you would need to achieve GOAL - which here is to calculate the number of shares post-conversion.

The entire thing is less than 640 tokens!

This is less than the previous side letter alone, and would fit well into any LLM's context. We can also inspect it, and see if it makes sense. You can also generate multiple copies - either at high temp or from multiple models - and check against each other.

Once you have a good intermediate, you can effectively freeze it unless the SAFE changes. At this point, you can combine the two into a new intermediate if you'd like (which we do for email extraction), or you can provide these to your original prompt, and simply apply the data.

With this example, there isn't as many statistics as our internal pipelines - unfortunately I can't release those without thorough scrubbing and generalization, and doing that while preserving the same behavior is hard.

RT or follow if this was helpful, I'll post more later