Exciting news! 🚨

The ChatGPT API is now available!

• ChatGPT has been out for a while, accessible through its web interface, with a ChatGPT Plus subscription for priority access.

• Now developers can use the API to build ChatGPT into their own applications.

Here's how to use it in Python ↓

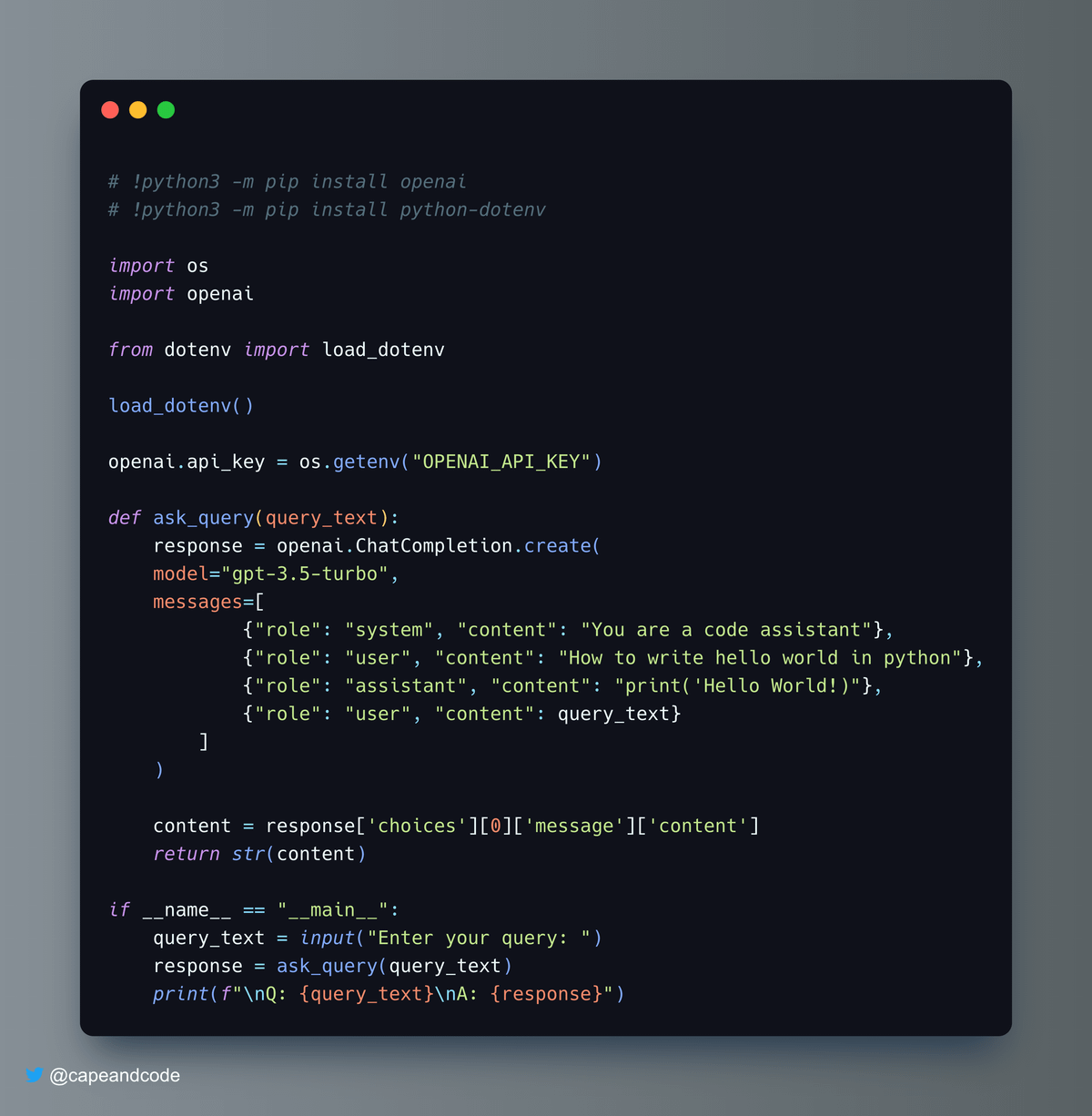

• You can set your OpenAI API key using a `.env` file.
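A `.env` file is just `KEY=value` lines, for example `OPENAI_API_KEY=sk-...`. The usual route is the `python-dotenv` package (`from dotenv import load_dotenv`). As a dependency-free sketch, the hypothetical helper `load_env_file` below parses such a file with the standard library and copies the values into `os.environ`. `OPENAI_API_KEY` is the variable name the `openai` library reads by default. The parsing is deliberately minimal (no multi-line values or escape sequences).

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict[str, str]:
    """Hypothetical helper: load simple KEY=value lines into os.environ.

    Blank lines and '#' comments are skipped, surrounding quotes are stripped,
    and variables that already exist are kept unless override=True.
    """
    loaded: dict[str, str] = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        if override or key not in os.environ:
            os.environ[key] = value
        loaded[key] = value
    return loaded
```

Call `load_env_file()` once at startup, then read the key with `os.environ["OPENAI_API_KEY"]`. Keep the `.env` file out of version control.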

• Then use the following code snippet to send queries and get responses.

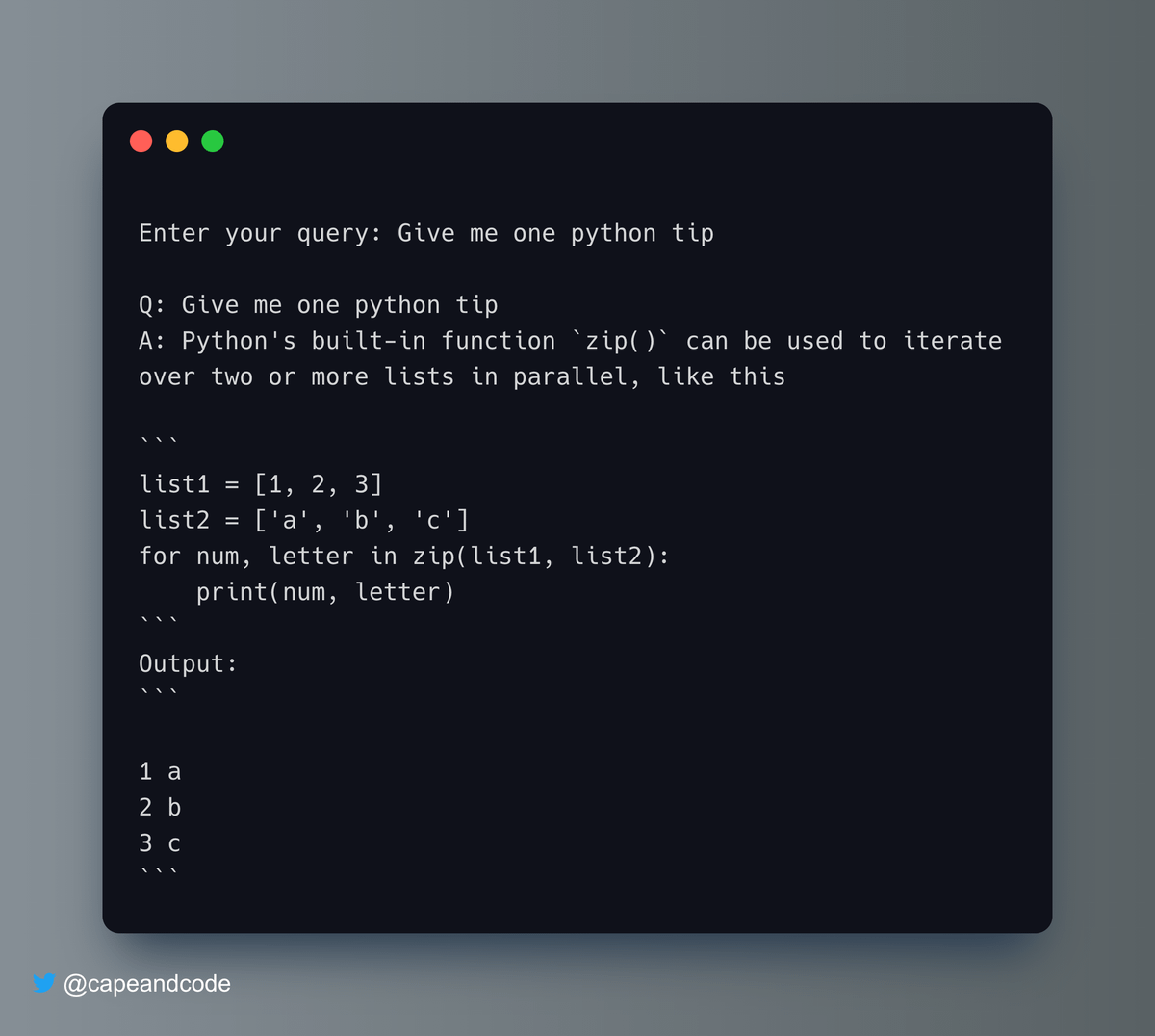

• Here's what the output looks like:

• If you have used the GPT-3 APIs, you may notice a few differences in the snippet.

• First, it uses a new Chat Completions API (`openai.ChatCompletion` in the Python library) rather than the Completions API.

• Second, instead of a single `prompt` string, it takes a `messages` parameter, which is how conversations are handled.
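Both differences show up directly in the HTTP request. As a sketch, assuming the REST endpoint `https://api.openai.com/v1/chat/completions` (the Completions API uses `/v1/completions` with a `prompt` string instead), the standard-library code below builds, but does not send, a Chat Completions request whose JSON body carries `model` and `messages`. The function name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, messages: list[dict[str, str]],
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    body = {
        "model": model,
        # Chat models take a list of role/content messages, not a "prompt" string.
        "messages": messages,
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req)` returns JSON whose reply text sits at `["choices"][0]["message"]["content"]`, whereas the Completions API returns it at `["choices"][0]["text"]`.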

• There are three roles for messages.

- system

- user

- assistant

• The `system` role sets the base instructions that define the bot's behaviour or personality.

• The `user` role carries the prompts written by the user.

• The `assistant` role holds the assistant's replies: either earlier responses from the model or example responses you write yourself.

• Initially, we can provide a few sample messages to seed the conversation, as we did in the snippet to turn it into a code assistant bot.
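A seed conversation like the one described above might look like the list below. The prompts in the actual snippet are in the screenshot, so the contents here are hypothetical: one `system` message that sets the behaviour, followed by an example `user`/`assistant` exchange that shows the model the expected answer style. The hypothetical `check_roles` helper rejects any role other than the three listed earlier.

```python
VALID_ROLES = {"system", "user", "assistant"}


def seed_messages() -> list[dict[str, str]]:
    """Hypothetical seed conversation that primes the model as a code assistant."""
    return [
        # system: base instructions that set the bot's behaviour
        {"role": "system",
         "content": "You are a helpful assistant that answers with concise Python code."},
        # user + assistant: one example exchange showing the desired answer style
        {"role": "user", "content": "Reverse a string s."},
        {"role": "assistant", "content": "s[::-1]"},
    ]


def check_roles(messages: list[dict[str, str]]) -> None:
    """Raise ValueError if any message uses a role outside VALID_ROLES."""
    for i, message in enumerate(messages):
        role = message.get("role")
        if role not in VALID_ROLES:
            raise ValueError(f"message {i} has invalid role {role!r}")
```

Example exchanges like this are optional; a `system` message alone is often enough to set the tone.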

• As the conversation progresses, we append each new query and response to `messages`. The API itself is stateless, so the model only sees the history we send with each request.
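A minimal sketch of that bookkeeping: the hypothetical `Conversation` class below appends every query and reply to the message list and sends the whole history each time. To keep it runnable offline, the model call is injected as a plain function `send` (an assumption for illustration) that takes the message list and returns the reply text.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps the full message history, because each API call is stateless."""

    def __init__(self, send: Callable[[list[Message]], str], system_prompt: str) -> None:
        self.send = send
        self.messages: list[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, query: str) -> str:
        # Record the user's query, send the whole history, then record the reply.
        self.messages.append({"role": "user", "content": query})
        reply = self.send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

With the pre-1.0 `openai` Python package, `send` could be `lambda msgs: openai.ChatCompletion.create(model="gpt-3.5-turbo", messages=msgs)["choices"][0]["message"]["content"]`. Because the full history is resent on every turn, the tokens used per request grow as the conversation gets longer.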

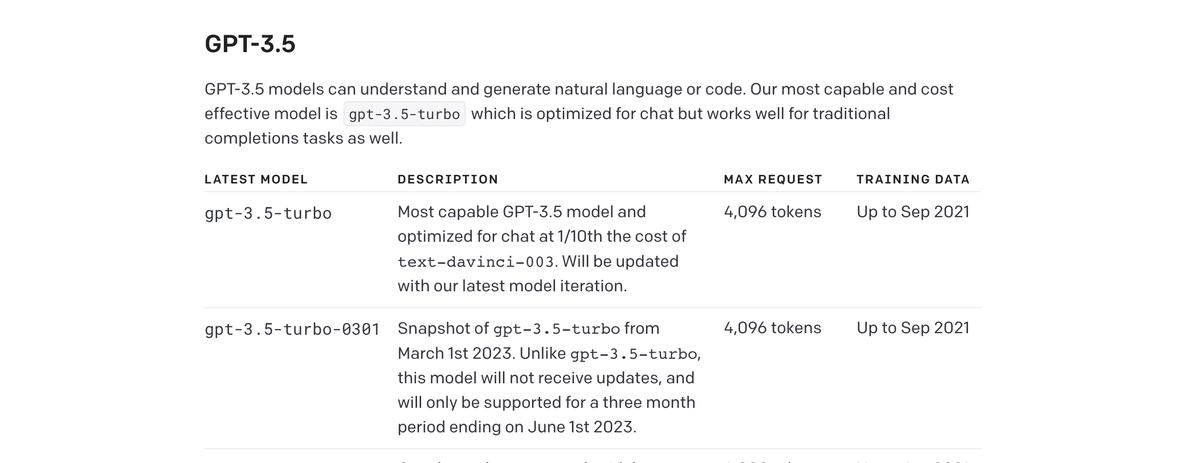

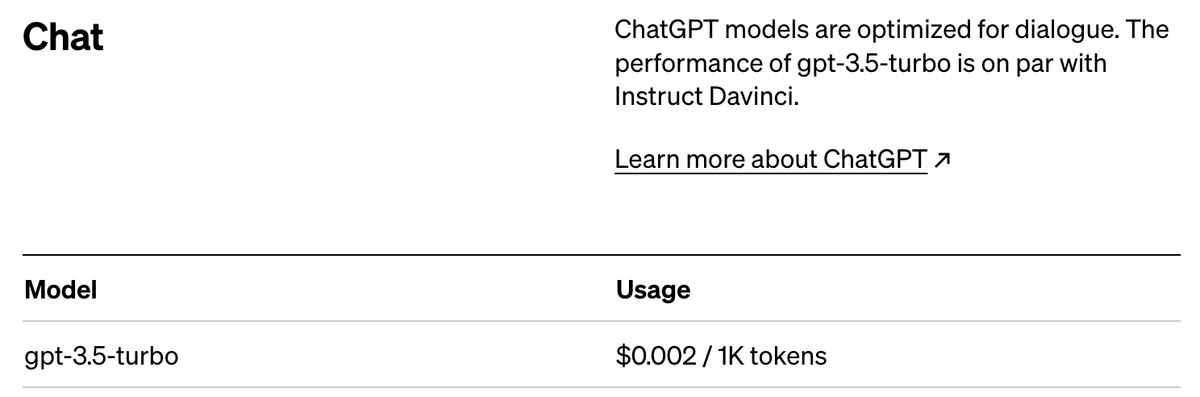

• The ChatGPT models are not listed under the popular "ChatGPT" name but under their technical GPT-3.5 names.

• There are two models available:

- `gpt-3.5-turbo`, which points to the latest version

- `gpt-3.5-turbo-0301`, a fixed snapshot from March 1, 2023

• The pricing of this API is $0.002 per 1K tokens.

• For comparison, `davinci`, the most capable GPT-3 model, costs $0.02 per 1K tokens, so this API is 10 times cheaper.

• Its price is the same as that of the GPT-3 `curie` model.
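To make the pricing concrete, the sketch below estimates cost from a token count using the per-1K-token rates quoted in this thread (rates may change). It assumes the rate applies to total tokens, i.e. prompt plus response, which the API reports in the response's `usage` field as `total_tokens`. `PRICE_PER_1K_TOKENS` and `estimate_cost` are hypothetical names.

```python
# USD per 1K tokens, as quoted in this thread (March 2023); subject to change.
PRICE_PER_1K_TOKENS = {
    "gpt-3.5-turbo": 0.002,  # ChatGPT API
    "davinci": 0.02,         # GPT-3 davinci, 10x the ChatGPT rate
    "curie": 0.002,          # GPT-3 curie, same as the ChatGPT rate
}


def estimate_cost(total_tokens: int, model: str = "gpt-3.5-turbo") -> float:
    """Return the estimated USD cost for total_tokens at the listed rate."""
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS[model]
```

For example, one million tokens comes to about $2 with `gpt-3.5-turbo` versus about $20 with `davinci`.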

• Now that ChatGPT is available as an API, organizations that build chatbot applications are likely to benefit first, since it can make their bots' conversations more fluent and human-like!

Stay tuned for more information and tips!

Thanks!