Generating reproducible outputs in OpenAI models.

A common challenge encountered when using GPT models in applications is the lack of determinism in certain situations.

If you need deterministic outputs from GPT models, here is a useful trick to consider:


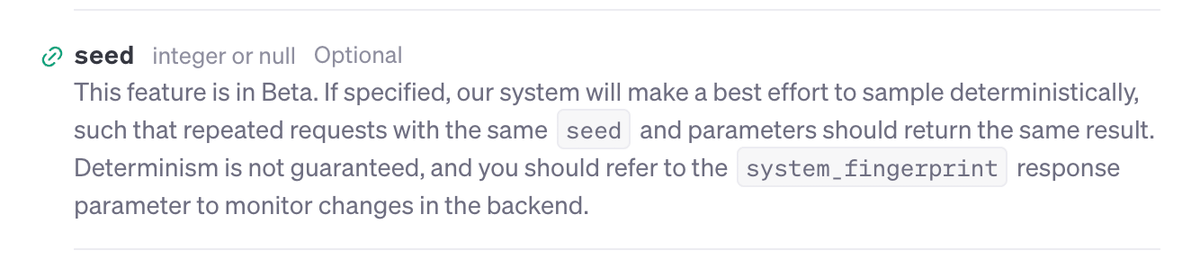

The OpenAI completions API provides a parameter called `seed` that makes the outputs for identical inputs much more consistent.

When you set `seed` to an integer, the system makes a best-effort attempt to sample deterministically, so requests that use the same seed value should return the same output.
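Here is a minimal sketch of a seeded request. It assumes the official `openai` Python package (v1 style, where `client = OpenAI()` exposes `client.chat.completions.create`). The helper name `chat_with_seed`, the model name and the temperature default are illustrative placeholders, not part of the original post. The client is passed in as an argument, so the function can also be exercised with a stand-in object and no network access.

```python
from typing import Any, Dict, List, Optional, Tuple


def chat_with_seed(
    client: Any,
    messages: List[Dict[str, str]],
    seed: int,
    model: str = "gpt-4o-mini",   # placeholder model name, swap in your own
    temperature: float = 0.0,     # placeholder; keep it fixed across runs
) -> Tuple[str, Optional[str]]:
    """Send one chat completion request with a fixed seed (hypothetical helper).

    Returns the generated text and the system_fingerprint reported by the API.
    `client` is expected to behave like openai.OpenAI().
    """
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        seed=seed,                # same integer on every request you want to reproduce
        temperature=temperature,  # every other parameter must stay identical as well
    )
    return response.choices[0].message.content, response.system_fingerprint
```

Calling it twice with exactly the same arguments and comparing the two returned pairs is a quick way to check how reproducible a given prompt is.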

Conceptually, `seed` plays the same role as a random seed elsewhere in machine learning: it pins down the randomness used when sampling tokens.

However, for the seed to reproduce the same output across requests, the input messages and all other parameters (model, temperature, and so on) must also be identical.
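Because every parameter has to match, it helps to confirm that two requests really are identical before comparing their outputs. One way to do that, sketched here as an assumption rather than anything the API provides, is to hash a canonical JSON serialization of the full request. `request_key` is a hypothetical helper name, and the parameter values are placeholders.

```python
import hashlib
import json


def request_key(params: dict) -> str:
    """Return a stable hash of every request parameter (messages, seed, model, ...).

    Two requests can only be expected to reproduce each other if their keys match.
    """
    # sort_keys makes the hash independent of dict insertion order;
    # compact separators remove whitespace differences from the serialization
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


base = {
    "model": "gpt-4o-mini",  # placeholder model name
    "messages": [{"role": "user", "content": "Name three primary colors."}],
    "seed": 42,
    "temperature": 0.7,
}
reordered = dict(reversed(list(base.items())))  # same parameters, different key order
changed = {**base, "temperature": 0.8}          # one parameter differs

print(request_key(base) == request_key(reordered))  # True
print(request_key(base) == request_key(changed))    # False
```

Storing this key next to each response makes it easy to spot comparisons that were never valid in the first place, for example when a prompt template changed slightly between runs.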

Unlike a seed in a typical local machine learning setup, this parameter does not guarantee identical output every time; it only improves the odds.
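Since seeding is only best effort, a practical way to gauge how reproducible a request is: repeat it several times and count the distinct outputs. The sketch below assumes a zero-argument `call` that sends one fixed, seeded request and returns its text; `output_distribution` is a hypothetical name, and the simulated outputs stand in for real API responses.

```python
from collections import Counter
from typing import Callable


def output_distribution(call: Callable[[], str], n: int = 5) -> Counter:
    """Send the same request n times and count how often each distinct output appears."""
    return Counter(call() for _ in range(n))


# Simulated outputs standing in for three real API responses
simulated = iter(["Red, blue, yellow.", "Red, blue, yellow.", "Red, yellow, blue."])
dist = output_distribution(lambda: next(simulated), n=3)
print(dist.most_common(1))  # [('Red, blue, yellow.', 2)]
```

With a real client, `call` could be a lambda that sends one seeded request and returns the message content. A counter with a single key means every run matched.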

Another consideration is that OpenAI's backend environment can change over time, and this is beyond our control.

Every response from the completions API includes a field called `system_fingerprint`, an alphanumeric string such as `fp_50f3bce4db`.

This string represents the current backend configuration in the OpenAI environment.

If two consecutive requests return different fingerprint values, the responses may differ even if the input, seed, and all other parameters remain constant.

That said, a different fingerprint does not necessarily mean the output will differ.
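The reasoning above can be turned into a small decision helper for two logged runs. This is a sketch under stated assumptions: `Run` and `compare_runs` are hypothetical names, and `request_key` is assumed to be a hash of all request parameters (seed included) computed by your own code.

```python
from dataclasses import dataclass


@dataclass
class Run:
    request_key: str         # hash of all request parameters, seed included (assumed)
    system_fingerprint: str  # value returned by the API with this response
    output: str              # generated text


def compare_runs(a: Run, b: Run) -> str:
    """Explain whether two runs were expected to match, and whether they did."""
    if a.request_key != b.request_key:
        return "not comparable: request parameters differ"
    same_output = a.output == b.output
    if a.system_fingerprint != b.system_fingerprint:
        # Backend changed: a different output is possible, but not guaranteed
        if same_output:
            return "outputs match despite backend change"
        return "outputs differ; backend changed, which may explain it"
    if same_output:
        return "outputs match"
    return "outputs differ with same backend; seeding is best effort"
```

Note the last branch: even with identical parameters and fingerprints, a mismatch is still possible because determinism is not guaranteed.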

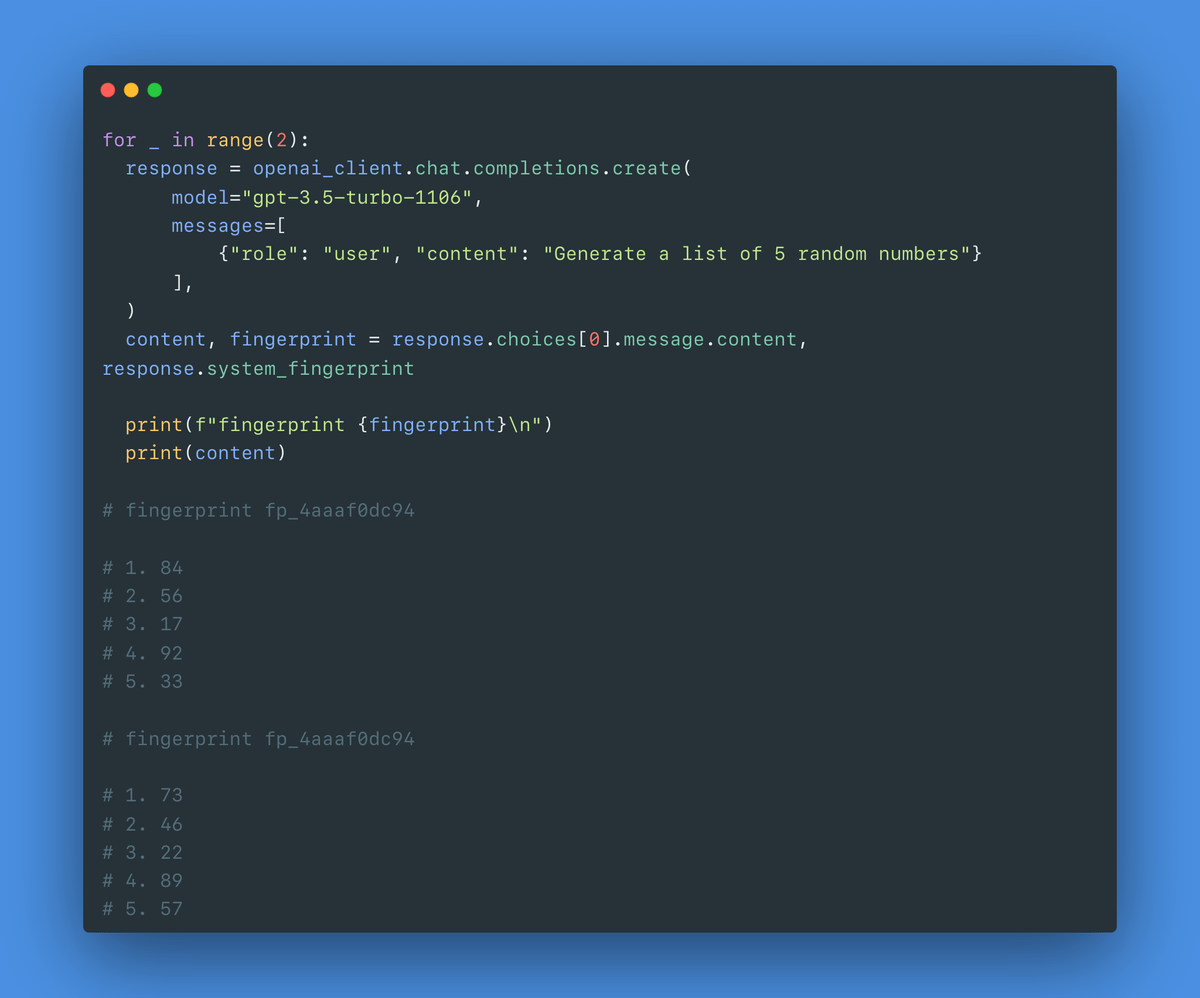

Here is a sample run without the seed parameter.

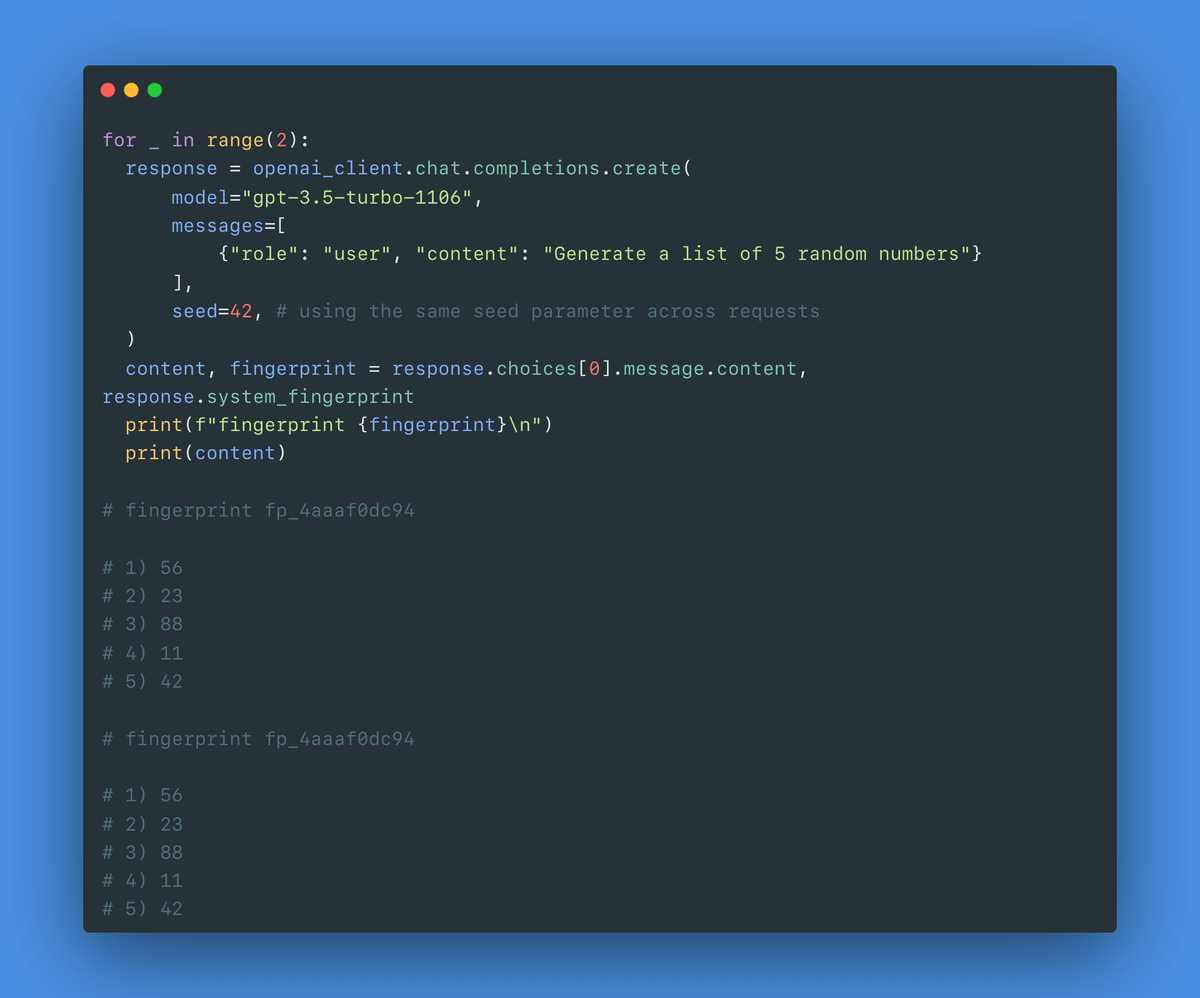

Here is a sample run with the seed parameter set to an integer.

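If you need reproducible outputs, keep track of the `seed`, the `system_fingerprint`, and the full set of input parameters.

Saving these values alongside each response lets you rerun a request later and tell whether a change in output coincides with a backend change.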
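A minimal way to save these values is to append one JSON line per request. The file layout, the field names and the `log_run`/`load_runs` helpers are assumptions for illustration; in practice `system_fingerprint` and the output text would be read from the API response.

```python
import json
from pathlib import Path


def log_run(path: str, params: dict, system_fingerprint: str, output: str) -> None:
    """Append one run (request parameters incl. seed, fingerprint, output) as a JSON line."""
    record = {"params": params, "system_fingerprint": system_fingerprint, "output": output}
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_runs(path: str) -> list:
    """Read back every logged run, oldest first."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

JSON Lines keeps each run independent, so the log can be appended to from a long-running application and later filtered by seed or fingerprint.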