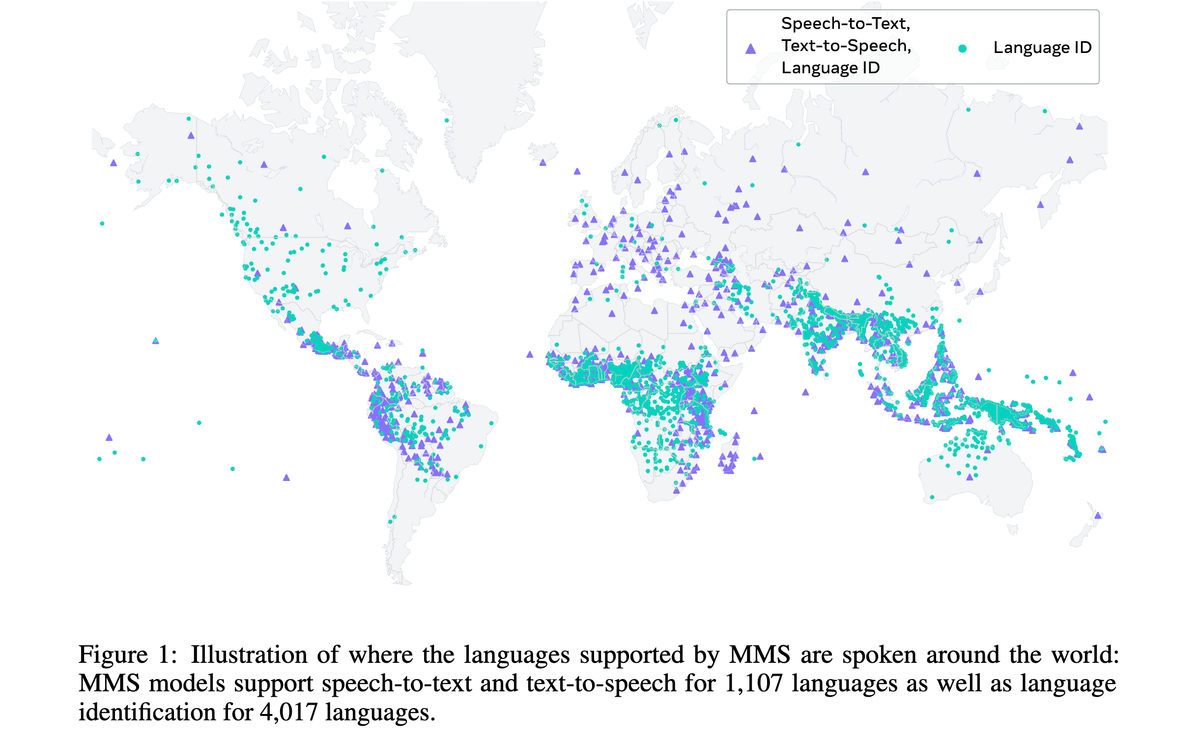

Meta AI recently open-sourced the code for the Massively Multilingual Speech (MMS) project.

It's a series of models for speech-to-text and text-to-speech for 1,100+ languages!

Here is how you can use it with several lines of code 👇🏼

Clone fairseq-py and install the latest version

Download MMS model

Choose one of the preferred models.

Prepare audio file

Requirements:

▪️ Your audio should be in .wav format

▪️ Your audio data should have a 16kHz sample rate

The second line of code is to satisfy these requirements.

Run Inference and transcribe your audio

To choose a language:

▪️ Go to github.com/facebookresearch/fairseq/tree/main/examples/mms#asr

▪️ Open Supported languages related to the model you've chosen

▪️ Replace --lang "eng" with the code of the chosen language