(1/26) @ethereum Scaling: @0xPolygonLabs' zkEVM

While we've all been optimistic about Ethereum scaling solutions, a few of the most ambitious builders decided to race ahead to the endgame of the World Computer.

Welcome to the zkEVM era!

(2/26) More of a long-form reader? No problem!

Check out this same article at its permanent home on my website, Inevitable Ethereum!

inevitableeth.com/en/home/ethereum/world-computer/projects/polygon/zkevm/overview

(3/26) @ethereum is the World Computer, a globally shared computing platform that exists in the space between a network of 1000s of computers (nodes).

These nodes are real computers in the real world, communicating directly from peer to peer.

inevitableeth.com/home/ethereum/world-computer

(4/26) The purpose of the greater @ethereum apparatus is to offer a single shared computing platform - the Ethereum Virtual Machine (EVM).

The EVM provides the platform for computation (transactions); everything you "do" on-chain happens within the EVM.

inevitableeth.com/en/home/ethereum/evm

(5/26) Today, @ethereum (specifically the EVM) is slow and expensive; much too inefficient to run a financial system, consumer applications, etc.

But this slowness is a feature, not a bug. The EVM is slow to allow for nodes with INCREDIBLY generous minimum specifications.

(6/26) Details for another thread; suffice to say that the lower the minimum requirements, the more nodes that can join. The more nodes that can join, the more decentralized the network is.

Decentralization = credible neutrality = world domination

So... how to scale @ethereum?

(7/26) Key insight: it's not important that execution is credibly neutral, just that settlement is.

Put another way, if we can post a (verifiable) record of every transaction & result onto @ethereum, then execution (actual computational work) can be done outside of the EVM.

(8/26) The endgame solution of this design is called a Zero-Knowledge Rollup.

Tl;dr rollups are performance optimized chains that process txns off-chain. Periodically they post a ZK-proof and a copy of every txn to @ethereum.

inevitableeth.com/home/ethereum/upgrades/scaling/execution/zk-rollup

(9/26) And that is EXACTLY what @0xPolygonLabs has delivered!

That's right, ladies and gentlemen... long before we thought possible, even before we figured out how to actually implement optimistic rollups, we not only have a ZK-Rollup...

WE HAVE A FREAKING ZKEVM!!!

twitter.com/0xPolygonLabs/status/1640360125188767745

(10/26) In a moment, we will trace through an actual zkEVM transaction and dissect how a ZK-Rollup works. But first, we need to discuss EVM-equivalence.

Because @0xPolygonLabs' zkEVM is special not only because it's a ZK-Rollup, but because it is (also) EVM-equivalent.

(11/26) @_bfarmer dropped a very good thread that covers the issue, so I'll let him do the talking.

Here's what we care about: if you develop something for @ethereum, it will (probably) work on the zkEVM.

No reformatting... no retooling... not even recompiling.

twitter.com/_bfarmer/status/1641861598179639296

(12/26) With EVM-equivalence, the zkEVM is ready to host every single* dApp that is running on @ethereum, @arbitrum, @optimismFND and any other EVM-equivalent chain.

Even the developer tooling works!

*there are some minor differences... for now.

(13/26) This is not only important for convenience, it is also incredibly important for security.

If something is battle tested on @ethereum, it can be ported directly to the zkEVM. No risks of compatibility changes introducing vulnerabilities.

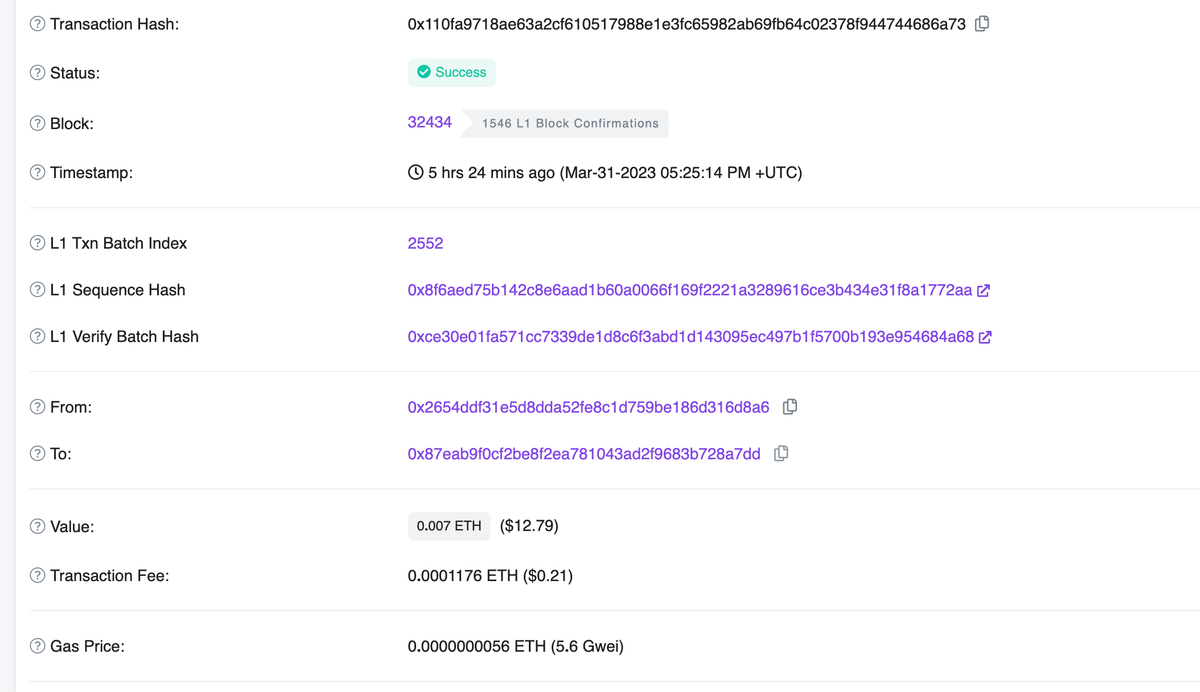

(14/26) Ok, so all of that sounds good on paper, but what does it look like?

We'll start with a transaction... here's one selected for no particular reason.

A simple transfer of .0007 $ETH.

zkevm.polygonscan.com/tx/0x110fa9718ae63a2cf610517988e1e3fc65982ab69fb64c02378f944744686a73

(15/26) Think back to our distinction from earlier: execution vs settlement. In this example...

Execution: the computational work required to debit 0x256...8a6's balance and to credit 0x87e...7dd's balance

Settlement: the final balances & a record of the transaction.
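To make the execution/settlement split concrete, here is a minimal Python sketch. It is a toy model, not how the zkEVM is implemented: the starting balances are made up, gas fees are ignored, and the truncated addresses from the thread are used only as opaque labels. The function names are hypothetical.

```python
WEI_PER_ETH = 10**18  # 1 ETH expressed in wei


def execute_transfer(balances, sender, recipient, amount_wei):
    """Execution: the computational work of debiting and crediting."""
    if amount_wei <= 0:
        raise ValueError("amount must be positive")
    if balances.get(sender, 0) < amount_wei:
        raise ValueError("insufficient balance")
    updated = dict(balances)  # leave the input untouched
    updated[sender] -= amount_wei
    updated[recipient] = updated.get(recipient, 0) + amount_wei
    return updated


def settlement_record(sender, recipient, amount_wei, balances):
    """Settlement: only the transaction record and the final balances."""
    return {
        "tx": {"from": sender, "to": recipient, "amount_wei": amount_wei},
        "final_balances": {
            sender: balances[sender],
            recipient: balances[recipient],
        },
    }


# Hypothetical starting balances (assumption, not taken from the chain).
before = {"0x256...8a6": 1 * WEI_PER_ETH, "0x87e...7dd": 0}
amount = 7 * 10**14  # 0.0007 ETH in wei

after = execute_transfer(before, "0x256...8a6", "0x87e...7dd", amount)
record = settlement_record("0x256...8a6", "0x87e...7dd", amount, after)
```

Everything inside `execute_transfer` is work that can happen off-chain; `record` is the part that has to end up somewhere credibly neutral.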

(16/26) When a user creates a txn in the zkEVM, it is executed on high performance machines called sequencers. Sequencers are centralized, but can execute txns VERY quickly and cheaply.

Once the sequencers work out the net result of the txn, it can be published to @ethereum.

(17/26) Instead of posting (the net result of) each txn, the zkEVM will create a batch - a list of txns previously executed on the ZK-Rollup.

As each sequencer generates a batch, it sends it to an aggregator. The aggregator is the entity responsible for the "ZK."
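Batching can be sketched in a few lines of Python. This is an illustration under assumptions: the `Tx` and `Batch` types, the `build_batches` helper, and the fixed `batch_size` are all hypothetical, and the real zkEVM's rules for deciding what goes into a batch are not described in this thread.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Tx:
    sender: str
    recipient: str
    amount_wei: int


@dataclass(frozen=True)
class Batch:
    number: int
    txs: Tuple[Tx, ...]  # already-executed txns, in execution order


def build_batches(
    executed_txs: Sequence[Tx], batch_size: int, first_number: int = 1
) -> List[Batch]:
    """Group executed txns into consecutively numbered batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batches: List[Batch] = []
    for offset in range(0, len(executed_txs), batch_size):
        chunk = tuple(executed_txs[offset:offset + batch_size])
        batches.append(Batch(number=first_number + len(batches), txs=chunk))
    return batches


# Hypothetical txns; in this sketch each batch would then go to an aggregator.
txs = [Tx("alice", "bob", 1), Tx("bob", "carol", 2), Tx("carol", "alice", 3)]
batches = build_batches(txs, batch_size=2)
```

The point of the grouping is cost: one proof and one L1 transaction cover a whole batch instead of a single txn.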

(18/26) <NOTE>

Zero-Knowledge is a buzzword that really doesn't mean much. Here's a quick primer, but don't stress too much.

The important thing to understand with ZK-based solutions is "what purpose is cryptography serving."

inevitableeth.com/en/home/concepts/zk-proof

</NOTE>

(19/26) Once aggregators have all the transaction data, they are responsible for generating validity proofs for each batch.

The validity proof is a small piece of data that can be used by anyone to verify the entire dataset.

It's difficult to generate, but easy to verify.

(20/26) After proof generation, the aggregator can post the proof and a record of every txn to the zkEVM smart contract on the @ethereum chain.

That smart contract first verifies the proof within the EVM. Once verified, the smart contract updates with all the txns.
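The verify-then-update order is the important part, and it can be sketched in Python. Loud assumption: the "proof" below is just a SHA-256 hash standing in so the control flow runs. A plain hash is NOT a validity proof and proves nothing about correct execution. The class and function names are hypothetical and do not mirror the real zkEVM contract.

```python
import hashlib
from typing import Callable, Dict


def placeholder_proof(batch_data: bytes) -> bytes:
    # Stand-in only: a real aggregator would run a ZK prover here.
    return hashlib.sha256(batch_data).digest()


def placeholder_verify(proof: bytes, batch_data: bytes) -> bool:
    # Stand-in only: a real contract would run a ZK verifier here.
    return proof == hashlib.sha256(batch_data).digest()


class RollupContractSketch:
    """Toy model of 'verify the proof first, then record the batch'."""

    def __init__(self, verify: Callable[[bytes, bytes], bool]) -> None:
        self._verify = verify
        self.verified_batches: Dict[int, bytes] = {}

    def submit_batch(self, number: int, batch_data: bytes, proof: bytes) -> None:
        # Step 1: verification. A bad proof leaves the state unchanged.
        if not self._verify(proof, batch_data):
            raise ValueError("proof rejected; state unchanged")
        # Step 2: update. Only reached after successful verification.
        self.verified_batches[number] = batch_data


contract = RollupContractSketch(placeholder_verify)
data = b"hypothetical serialized txns for one batch"
contract.submit_batch(2552, data, placeholder_proof(data))
```

Because the update only runs after the check passes, nobody has to trust whoever submitted the batch; they only have to trust the verifier.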

(21/26) This verification+update step is crucial; it serves as the basis for settlement/finalization for the zkEVM. Once it has been completed, the zkEVM is officially saying "these txns have been executed and cannot be undone."

And so, finality can happen (almost) instantly.

(22/26) Let's look!

Our txn landed at batch 2552, which was sent to the @ethereum smart contract with this transaction (L1 Sequence Hash):

etherscan.io/tx/0x8f6aed75b142c8e6aad1b60a0066f169f2221a3289616ce3b434e31f8a1772aa

and was verified by the Ethereum smart contract with this txn (L1 Verify Batch Hash):

etherscan.io/tx/0xce30e01fa571cc7339de1d8c6f3abd1d143095ec497b1f5700b193e954684a68

(23/26) And that's your ZK-Rollup, right in a single screenshot!

Execution achieved by @0xPolygonLabs' zkEVM sequencer, settlement achieved by @ethereum's decentralization.

Centralized (fast, cheap) execution, with decentralized (credibly neutral) settlement.

(24/26) Up until now I've been handwaving away the centralization problem for ZK-Rollups, but it's time to address it head on. Basically, we have two problems:

- What happens if @0xPolygonLabs puts me on the naughty list?

- What happens if Polygon Labs disappears tomorrow?

(25/26) Fortunately, the solution to both problems is the same: everything is open sourced.

Today, the process is managed by a security council; (I imagine that) in the future anyone will be able to deploy credibly neutral infrastructure for the zkEVM.

polygon.technology/blog/polygon-zkevm-is-now-fully-open-source

(26/26) From here, there is so much more I want to talk about @0xPolygonLabs' zkEVM. zkEVM bridging and shard provers, Proof of Donation/Efficiency, app chains...

Guess I'm going to need more threads!

Bottom line, now is an INCREDIBLY exciting time for Polygon and for Ethereum.