Color in digital images is almost always completely made up.

This will take a bit of explaining so hear me out. 👇

The first culprit is how image sensors work.

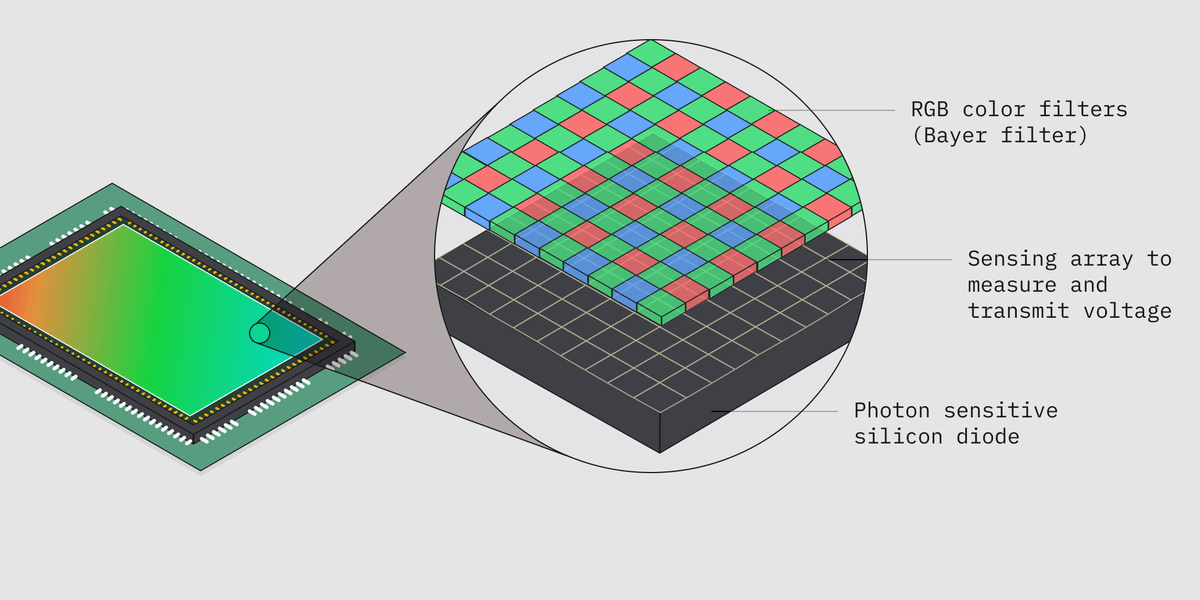

They exploit a property of silicon photodiodes: when a photon hits one, it knocks an electron loose, and the charge that builds up can be read out as a voltage.

We can measure this and know how intense the light is on that particular pixel.

But we don't know anything about the wavelength, and therefore the color, of that light.

To get around this, we put a grid of coloured filters (most commonly arranged in what is known as a Bayer pattern) over the sensor, essentially splitting the sensor up into red, green and blue pixels.

These filters block out the other wavelengths of light.

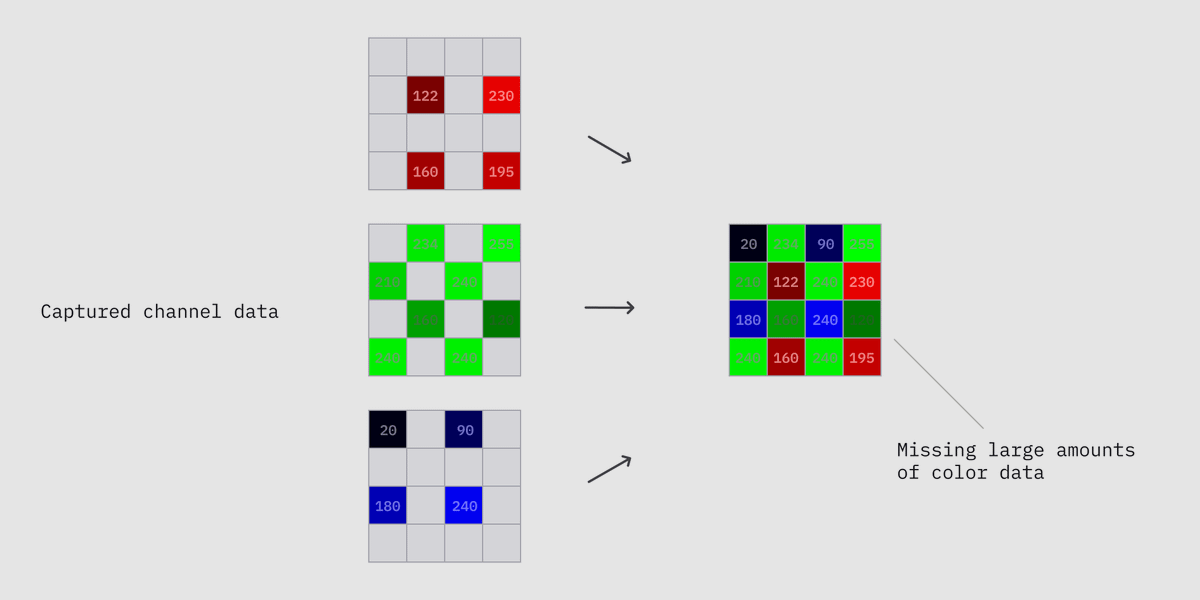

Problem is, each pixel only gets one color channel, so we end up with a patchwork of color data.

A green pixel only knows how much green light is hitting it.

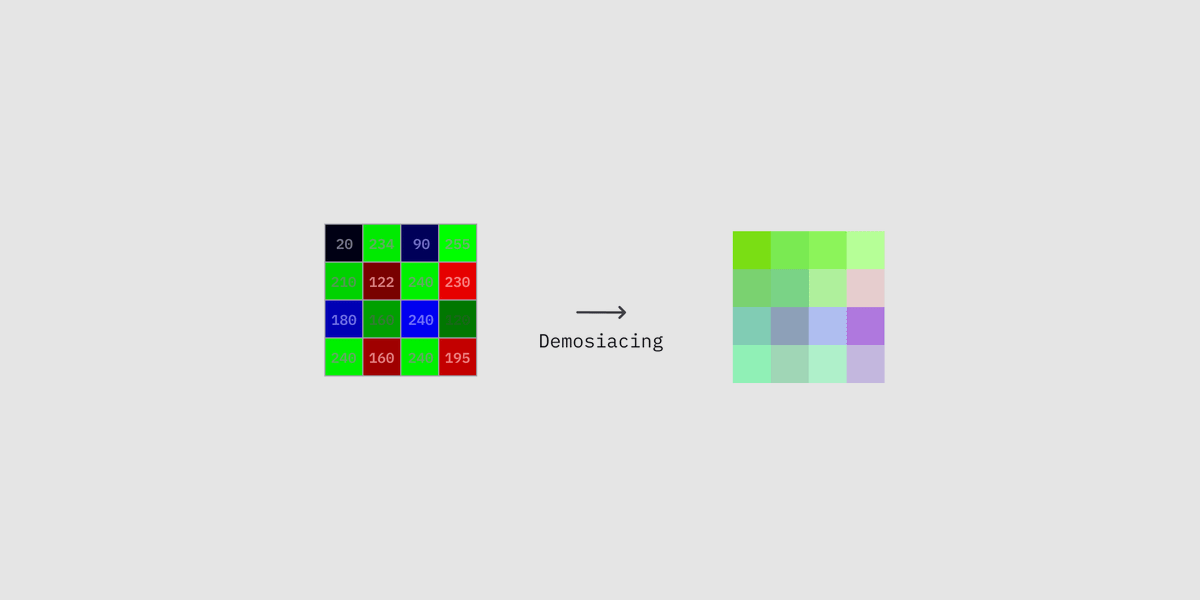
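
To make the patchwork concrete, here is a rough sketch of what the sensor keeps from a full-color scene. The RGGB layout is one common arrangement and is an assumption here, as are the function names `bayer_channel` and `mosaic`.

```python
# Sketch: simulate what a Bayer-filtered sensor records from a full-color image.
# The RGGB layout and the function names are assumptions for illustration.

def bayer_channel(row, col):
    """Return which channel (0=R, 1=G, 2=B) the filter at (row, col) passes."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # even rows: R G R G ...
    return 1 if col % 2 == 0 else 2       # odd rows:  G B G B ...

def mosaic(image):
    """Keep only the filtered channel at each pixel; the other two are discarded."""
    return [
        [pixel[bayer_channel(r, c)] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]

# A 2x2 scene where every pixel is the same orange: (R, G, B) = (255, 128, 0).
scene = [[(255, 128, 0)] * 2 for _ in range(2)]
raw = mosaic(scene)
print(raw)  # [[255, 128], [128, 0]]
```

Twelve numbers went in and four came out. Two thirds of the color values never get measured at all.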

Lucky for us, humans are actually pretty terrible at seeing fine detail in color, far worse than we are with brightness.

To exploit this, cameras use a process called demosaicing to fill in the missing color data.

To oversimplify, these algorithms look at neighbouring values and guess at what the missing value might be.

There are a bunch of clever algorithms for detecting edges and segmenting the image but it is always just a guess.
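
Here is a minimal sketch of the simplest version of that guess: average whatever neighbours did measure the missing channel. It assumes an RGGB layout and an image of at least 2x2 pixels, and it is far cruder than what a real camera does. The names `bayer_channel` and `demosaic` are made up for this example.

```python
# Sketch: naive neighbour-averaging demosaic. Not any camera's real algorithm.
# Assumes an RGGB Bayer layout and a raw image of at least 2x2 pixels.

def bayer_channel(row, col):
    """Return which channel (0=R, 1=G, 2=B) was measured at (row, col)."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2

def demosaic(raw):
    """Guess full (R, G, B) pixels by averaging samples in each 3x3 neighbourhood."""
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums, counts = [0, 0, 0], [0, 0, 0]
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        ch = bayer_channel(rr, cc)
                        sums[ch] += raw[rr][cc]
                        counts[ch] += 1
            own = bayer_channel(r, c)
            # Keep the value that was really measured; average the other two.
            out_row.append(tuple(
                raw[r][c] if ch == own else sums[ch] // counts[ch]
                for ch in range(3)
            ))
        out.append(out_row)
    return out

# Raw readings from a scene that is black on the left and white on the right.
raw = [[0, 0, 255, 255],
       [0, 0, 255, 255]]
print(demosaic(raw)[0][1])  # (127, 0, 0)
```

That pixel was pure black in the scene, but the guess comes out dark red because a bright red sample sits next door. This kind of fringing along edges is exactly what the cleverer algorithms are trying to avoid.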

This is why the modern phone camera war is more about software than hardware.

Being really good at the demosaicing process buys you quite a lot of perceived quality.

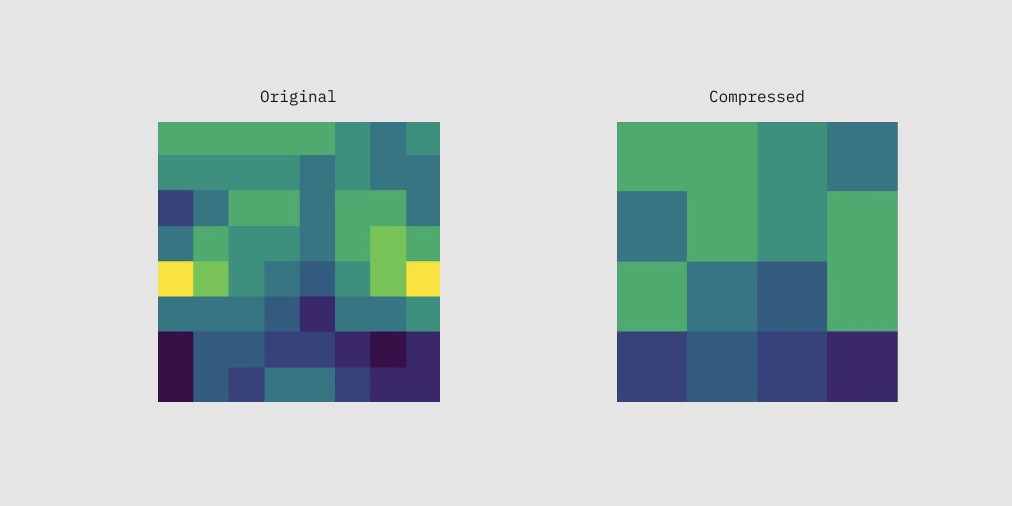

The second culprit is how we compress an image.

The RAW image data I was talking about above is too large to be flung around the internet so we need to compress it.

For a photograph, you are likely going to use a lossy format like JPEG (.jpg).

Here's the bad news though: one of the very first steps in JPEG compression is called chroma subsampling, and it massively reduces the amount of color information in the image.

It often keeps only about a quarter of the original color information, drastically reducing the file size.
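
For a feel of the numbers, here is a back-of-the-envelope count. It assumes the common 4:2:0 scheme, where color is stored at half resolution in both directions, and a 4000x3000 image picked purely as an example. `sample_counts` is a made-up helper.

```python
# Sketch: count stored samples with and without chroma subsampling.
# Assumes 4:2:0 (color halved horizontally and vertically) and a 4000x3000 image.

def sample_counts(width, height, h_factor=2, v_factor=2):
    """Return (brightness samples, samples per color channel) after subsampling."""
    luma = width * height
    chroma_per_channel = (width // h_factor) * (height // v_factor)
    return luma, chroma_per_channel

luma, chroma = sample_counts(4000, 3000)
print(luma // chroma)                    # 4
print((luma + 2 * chroma) / (3 * luma))  # 0.5
```

Each of the two color channels keeps a quarter of its samples, and the image as a whole shrinks to half its size before any of the other compression steps have run.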

Once again, our poor color differentiation is being exploited but this time more crudely.

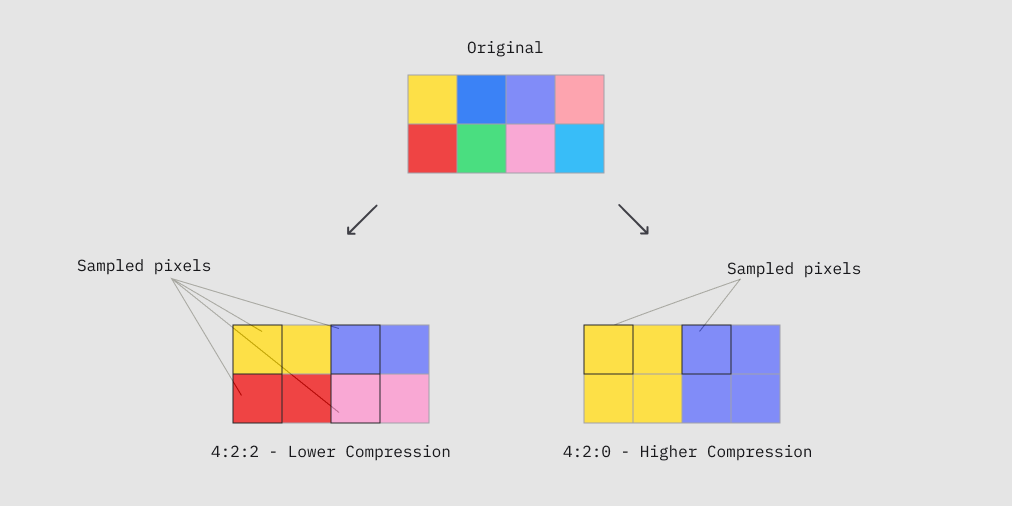

The image is divided up into blocks of 8 pixels (4 wide and 2 tall).

The color of some of those pixels is sampled, depending on the amount of subsampling, and applied to the others.
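
A rough sketch of that idea on a single color channel. It assumes 4:2:0-style subsampling, averages each 2x2 block (an encoder may pick its samples differently), and expects even image dimensions. `subsample_420` and `upsample_420` are hypothetical names.

```python
# Sketch of 4:2:0-style chroma subsampling on one color (chroma) plane.
# Averaging each 2x2 block is an assumption; encoders may choose samples differently.
# Assumes the plane has an even number of rows and columns.

def subsample_420(plane):
    """Shrink a chroma plane by 2 in each direction by averaging 2x2 blocks."""
    out = []
    for r in range(0, len(plane), 2):
        out_row = []
        for c in range(0, len(plane[0]), 2):
            block = [plane[r][c], plane[r][c + 1],
                     plane[r + 1][c], plane[r + 1][c + 1]]
            out_row.append(sum(block) / 4)
        out.append(out_row)
    return out

def upsample_420(small):
    """Rebuild a full-size plane by repeating each stored value over its 2x2 block."""
    out = []
    for row in small:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

cb = [[100, 110, 200, 210],
      [120, 130, 220, 230]]
stored = subsample_420(cb)
print(stored)                # [[115.0, 215.0]]
print(upsample_420(stored))  # [[115.0, 115.0, 215.0, 215.0], [115.0, 115.0, 215.0, 215.0]]
```

Eight color values went in, two were stored, and none of the eight that come back out match what was there originally.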

Back to my original point - very little of the color data you see by this stage was captured by the image sensor. It's almost all an approximation.

This is wild to me.

By the way, this applies to video too. Even more so, as chroma subsampling is a big part of video compression.

Here are a bunch of interesting videos about how a camera sensor works:

youtube.com/watch?v=_KMKYIw8ivc

youtube.com/watch?v=MytCfECfqWc

youtube.com/watch?v=LWxu4rkZBLw

And how JPEG compression / color downsampling works:

youtube.com/watch?v=wUyqathWeV0

youtube.com/watch?v=n_uNPbdenRs&list=WL&index=11